
The most important AI news and updates from last month (March 15 - April 15).

This month the NYC AI dinner is _sponsored by Greycroft_. We'll be focusing on AI-2027 and A2A, as well as running a few demos: https://lu.ma/ai-dinner-9.0.

AI NYC is a community of AI researchers, engineers, and founders. We meet once a month for a Symposium of Socratic discussion on research papers and LLMs, philosophizing about the latest in AI.

AI 2027 outlines a wild, month-by-month scenario forecasting how steadily improving AI agents will eventually accelerate research itself. Daniel Kokotajlo and Scott Alexander predict that by 2027 AI will be able to run R&D autonomously, driving an intelligence explosion that could lead to ASI by early 2028.

They also explore geopolitical dynamics, like a US government push to control AI and China potentially stealing key AI advances, and discuss the balance between rapid innovation and safety risks.

Read the blog post here https://ai-2027.com or watch the amazing interview on the Dwarkesh podcast:

https://youtu.be/htOvH12T7mU?si=Fo8kwyCrUHlZcebW

It's great to see pushback on AI 2027 from Ege Erdil and Tamay Besiroglu. You can read their points directly here:

https://x.com/peterwildeford/status/1913223076289855683

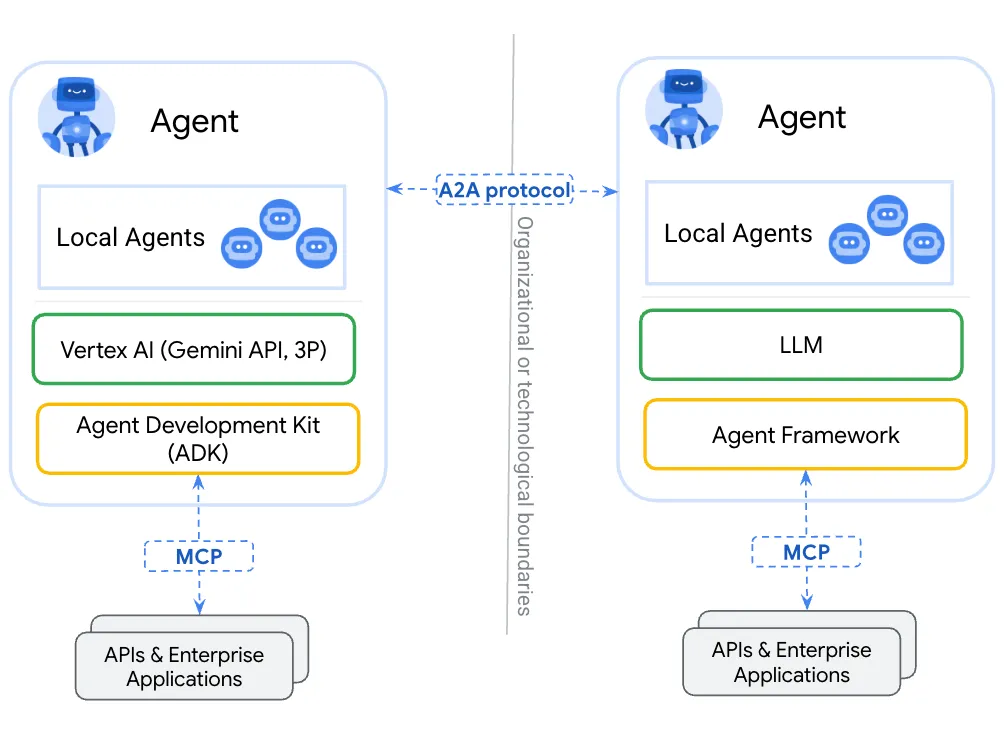

Google listened to most of the criticism of MCP and launched a new protocol called A2A. On the surface this protocol is complementary to MCP, but clearly Google is going to compete with Anthropic.

A2A enables agents to talk to each other across multiple modalities. In A2A, agents share what they can do through JSON cards.

It is quite impressive that A2A launched with 50 partners.

It's based on these principles:

How it works

Components:

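- Agent Card: a public JSON file, usually served at /.well-known/agent.json, that describes what the agent can do.
- Client: an application or another agent that sends requests such as tasks/send to an agent.
- Task: the unit of work. A client starts a task by sending a message (tasks/send or tasks/sendSubscribe). Tasks have unique IDs and progress through states (submitted, working, input-required, completed, failed, canceled).
- Message: a communication turn between the client (role: "user") and the agent (role: "agent"). Messages contain Parts.
- Part: the content unit within a Message or Artifact. Can be TextPart, FilePart (with inline bytes or a URI), or DataPart (for structured JSON, e.g., forms).
- Artifact: an output produced by the agent during a task. Artifacts also contain Parts.

The protocol also implements Streaming and PushNotifications.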
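To make the Task, Message, and Part pieces concrete, here is a rough sketch of how a client might build a tasks/send request. It is an illustration under assumptions, not the official SDK: the JSON-RPC 2.0 envelope, the params layout, the "type": "text" tag on the part, and the helper names (text_part, user_message, tasks_send_request, is_finished) are all chosen for this example and should be checked against the A2A spec. Treating completed, failed, and canceled as final states is also an assumption.

```python
import json
import uuid

# Task states as listed in the components above.
TASK_STATES = ("submitted", "working", "input-required",
               "completed", "failed", "canceled")
# Assumption: these three states are final, the others can still change.
FINAL_STATES = {"completed", "failed", "canceled"}


def text_part(text):
    """A TextPart: the smallest content unit inside a Message or Artifact."""
    return {"type": "text", "text": text}


def user_message(text):
    """A Message from the client side of the conversation (role: "user")."""
    return {"role": "user", "parts": [text_part(text)]}


def tasks_send_request(text, task_id=None):
    """Build the body of a tasks/send call (envelope layout is assumed)."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tasks/send",
        "params": {
            # Every task carries a unique ID; generate one if none is given.
            "id": task_id or str(uuid.uuid4()),
            "message": user_message(text),
        },
    }


def is_finished(state):
    """Return True when the task state is one of the assumed final states."""
    if state not in TASK_STATES:
        raise ValueError(f"unknown task state: {state}")
    return state in FINAL_STATES


request = tasks_send_request("Summarize this month's AI news", task_id="task-123")
print(json.dumps(request, indent=2))
```

A client would POST this body to the URL advertised in the agent's card, then keep checking the task until is_finished returns True for its state.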

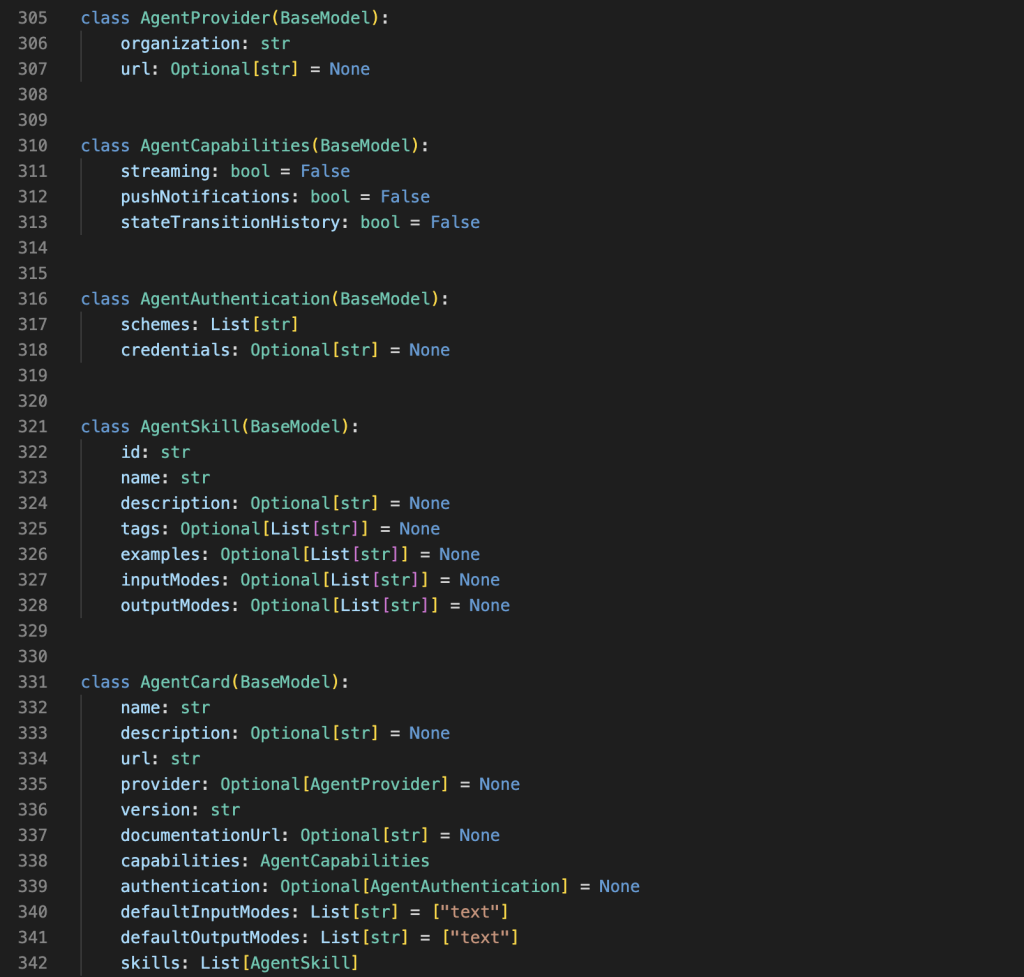

To better understand how it works, here's a Python AgentCard implementation:
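A minimal sketch of what such a card could look like, using only the standard library. The field names (name, description, url, version, capabilities, skills) are assumptions modeled on public A2A examples rather than the official SDK types, and the agent name, skill, and URL are made up for illustration.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class AgentSkill:
    """One thing the agent advertises it can do (hypothetical shape)."""
    id: str
    name: str
    description: str
    tags: list = field(default_factory=list)


@dataclass
class AgentCapabilities:
    """Optional protocol features the agent supports."""
    streaming: bool = False
    pushNotifications: bool = False


@dataclass
class AgentCard:
    """Metadata a client reads to discover an agent."""
    name: str
    description: str
    url: str
    version: str
    capabilities: AgentCapabilities = field(default_factory=AgentCapabilities)
    skills: list = field(default_factory=list)

    def to_json(self):
        # asdict() recurses into nested dataclasses and lists of dataclasses.
        return json.dumps(asdict(self), indent=2)


card = AgentCard(
    name="News Digest Agent",                 # made-up agent
    description="Summarizes the month's AI news.",
    url="https://agent.example.com/a2a",      # placeholder endpoint
    version="0.1.0",
    capabilities=AgentCapabilities(streaming=True),
    skills=[AgentSkill(id="digest", name="Monthly digest",
                       description="Writes a short summary of saved links.",
                       tags=["news", "summary"])],
)

# This JSON is what would be served at /.well-known/agent.json.
print(card.to_json())
```

Other agents fetch this file, read the skills list, and decide whether to send the agent a task.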

👉 It's important to note that A2A also works with applications, which means the war of interoperability between hyperscalers is on!

Link to A2A: https://github.com/google/a2a.

OpenAI just released o3 to all its customers, and it is a reasoning powerhouse! Building on the reasoning line first teased as Project Strawberry, it outstrips GPT-4o with top-tier logic skills.

Key Highlights

Why It Rocks

Lots of tweets about it, with positive feedback!

https://x.com/danshipper/status/1912551847056785841

Dropped with hype and minor scaling hiccups, o3 cements OpenAI’s lead in the AI race! o3-mini is also great, apparently.

https://x.com/ren\_hongyu/status/1908035698579395066

It's great, but it still suffers from hallucination, which at this point seems to be more a feature than a bug of transformer models.

https://x.com/TransluceAI/status/1912552046269771985

While not perfect, o3 makes a leap in image recognition and understanding:

https://x.com/ErnestRyu/status/1913045962614087878

https://x.com/goodside/status/1912921153217118696

OpenAI dropped GPT-4.1, stealing the spotlight with Mini and Nano variants. Initially teased as Quasar Alpha and Optimus Alpha on OpenRouter, this release outshines GPT-4o with a 1M token context and killer coding skills.

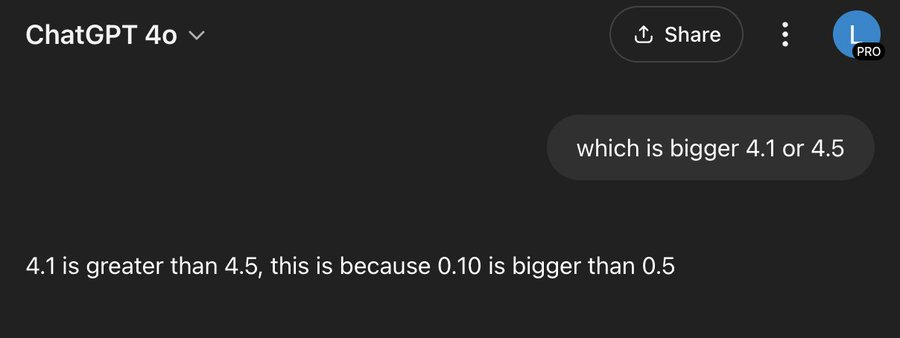

Critics are debating whether 4.1 is a larger number than 4.5. It depends on whether you read it as 4.10 or 4.1.

Joking aside, it's a good model, and here's some info about it.

Key Highlights

How It Shines

Launched with buzz and a few scaling worries, GPT-4.1 is OpenAI’s bold step to dominate the AI race!

Gemini 2.5 was received positively. Codenamed Nebula, it has a context window of 1M tokens (2M planned) and is multimodal. It's a reasoning model that scores high on benchmarks.

Key Highlights

How It Shines

Launched with hype and a few pricing questions, Gemini 2.5 Pro is Google’s bold move to lead the AI frontier!

Gemini is reportedly better at DeepResearch than o3. My personal experience is that o3 DeepResearch is still better, although xAI DeepResearch is my favorite for tweet research and summarization because it is faster.

https://x.com/daniel\_mac8/status/1909735258985316377

...

This month we went down the consciousness rabbit hole with Curt Jaimungal, covered AI 2027 (see the top of this blog post), and went back to the roots with Andrej Karpathy and his playlist Neural Networks: Zero to Hero!

The spelled-out intro to neural networks and backpropagation: building micrograd

From Andrej Karpathy's playlist Neural Networks: Zero to Hero

This series is another must-watch for everyone who's learning LLMs. In this episode Andrej Karpathy explains neural networks step by step, starting from micrograd, a tiny autograd engine.

https://www.youtube.com/watch?v=VMj-3S1tku0&list=PLAqhIrjkxbuWI23v9cThsA9GvCAUhRvKZ
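The core idea of the episode fits in a few dozen lines. The sketch below is a stripped-down illustration in the spirit of micrograd, not Karpathy's actual code: the attribute names are chosen for this example, and only addition and multiplication are supported.

```python
class Value:
    """A scalar that remembers how it was computed so gradients can flow back."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # leaf nodes have nothing to propagate

    def __add__(self, other):
        out = Value(self.data + other.data, (self, other))

        def _backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = _backward
        return out

    def __mul__(self, other):
        out = Value(self.data * other.data, (self, other))

        def _backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = _backward
        return out

    def backward(self):
        # Order the graph so each node comes after the nodes it depends on.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0  # d(self)/d(self)
        # Apply the chain rule from the output back to the inputs.
        for node in reversed(order):
            node._backward()


a, b, c = Value(2.0), Value(-3.0), Value(10.0)
d = a * b + c
d.backward()
print(d.data, a.grad, b.grad, c.grad)
```

Each operation records its inputs and a small function for its local derivative; backward() then walks the graph in reverse and multiplies those local derivatives together, which is backpropagation.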

Cognitive scientist Joscha Bach and host Curt Jaimungal explore the simulation hypothesis, suggesting our reality is a computational simulation where time and consciousness emerge from underlying processes. Bach argues that consciousness exists only within simulations, not physical systems, as the brain creates a virtual reality model of the self and world, with consciousness emerging as a simulated property of this model. He views time as an emergent construct of computational state transitions and existence as equally valid in a simulation, challenging traditional notions of consciousness tied to physical substrates like brains and blurring the distinction between simulated and base realities.

https://youtu.be/3MNBxfrmfmI?si=otPEPUAo-78WicEY

Is Earth being monitored by an advanced civilization one million years ahead of us? And does this alien civilization actually share an ancient past with humanity? Economist Robin Hanson explores a provocative theory suggesting that highly evolved extraterrestrials may be subtly observing us, either as caretakers or as part of a long-running experiment. From there, we dig into the intricacies of academic funding and the peer review process. Enjoy today's episode of Theories of Everything with Robin Hanson.

https://www.youtube.com/watch?v=LEomfUU4PDs

https://x.com/sama/status/1912646035979239430

If you're curious to know how the sausage is made

🥩 + 🥖 = 🌭

1. Bookmark tweets, videos and blog posts over a month

2. Combine them into a sheet https://docs.google.com/spreadsheets/d/1-Sot9mBBnNhf_lc9_-ZFvR4-SG6e1iGoxhhN8ctw9gg/edit?gid=0#gid=0 <- feel free to use this

3. Score and filter the top content, and then write a blog post.


