
The biggest event in January was the launch of DeepSeek R1, which shook the market and pushed NVIDIA stock down by 20% in a few days.

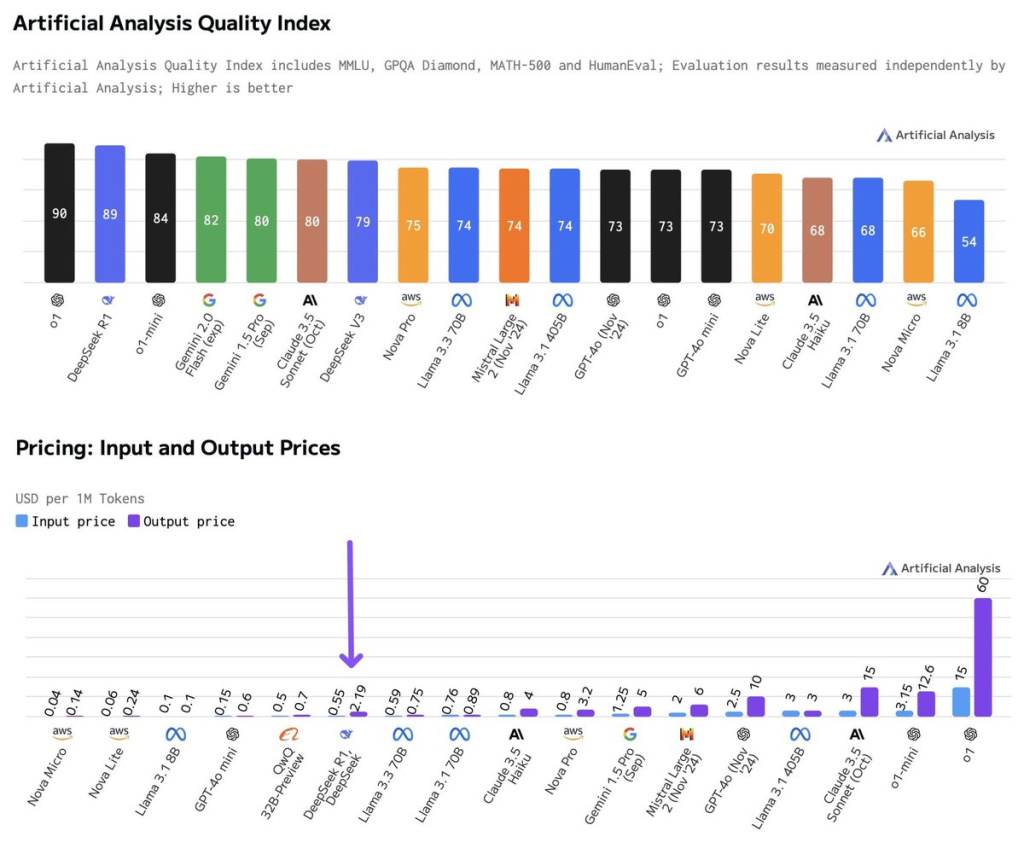

The reason behind this drop is that the DeepSeek team said training cost only $5m, 1/30th of what OpenAI o1 is estimated to cost, with similar benchmark results. The model weights are openly released, but the training data and training code are not.

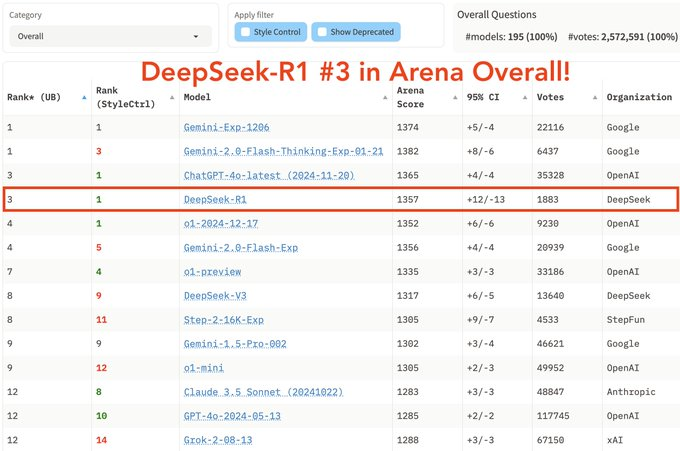

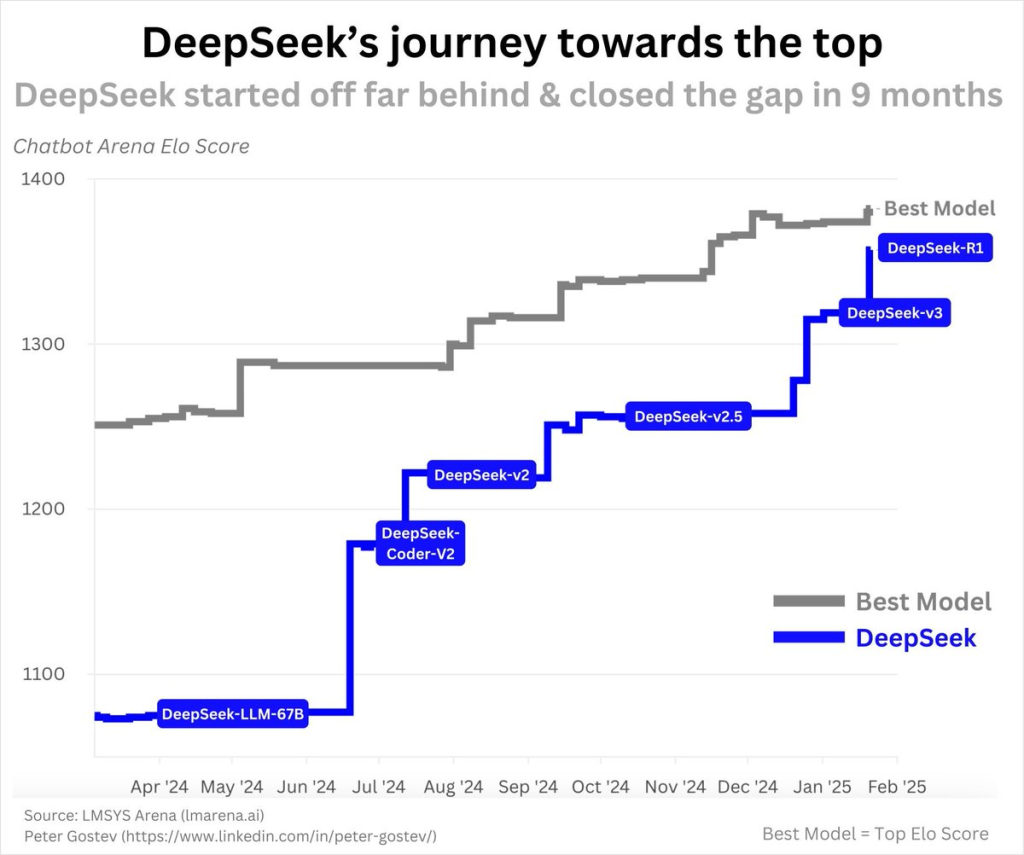

DeepSeek-R1 made it into the top 3 of the Chatbot Arena. The initial take from the industry and social media is that DeepSeek performs better than o1, at least anecdotally. Most AI influencers pushed the R1 narrative with urgency and hype to capture attention. So while several benchmarks are positive for R1, they do not tell the whole story.

DeepSeek-R1 performs well in math but scores lower on other tests, including the AIW test. Of course, the reality check only came after the market drop.

All the top leaders offered a take on DeepSeek to mitigate the damage: Alexandr Wang from Scale AI said DeepSeek must have 50k NVIDIA H100s but can't disclose that because of the chip export restrictions. Satya Nadella invoked the Jevons paradox, saying that as AI gets more efficient and affordable, we'll see its use skyrocket. Dario Amodei called for tighter chip export restrictions on China.

This blog post circulated a few days before the big drop. It's worth a read to understand the mood of the moment and the bear narrative for NVIDIA.

DeepSeek looks like it appeared out of nowhere, but its team has been consistently delivering improved LLMs.

This event had a list of winners and losers:

High-Flyer, the hedge fund behind DeepSeek, very likely shorted NVIDIA stock and pushed the market into panic mode. DeepSeek won this trading battle and shook up the US, showing that China is not only catching up but also pushing to become an AI leader.

NVIDIA, despite the 20% price drop, is a clear winner: AI is clearly not just a hype moment, and as prices drop, we'll need more chips, not fewer.

Google: building models and selling inference is a weak moat, while distribution is a strong moat, and that is where Google has an edge.

Hugging Face started reproducing R1 with the goal of open-sourcing the whole pipeline, including the training data and code.

OpenAI spent billions of dollars on research to build o1 and is currently losing money on its Pro subscriptions. With the launch of o3-mini, Operator, and Deep Research, OpenAI regained the lead shortly after. But open source is catching up, and none of Google, Meta, or OpenAI has a moat against open source.

The US: China is now leading across multiple sectors, including drones and robotics, and is now catching up on AI.

Taiwan is probably at higher risk now: a falling-out between the US and China could result in an invasion.

The impressive part of DeepSeek R1 is that its R1-Zero variant expands on DeepSeek V3 using reinforcement learning (RL) alone, without Supervised Fine-Tuning (SFT). In doing so, the team rediscovered some of the core ideas behind 🍓 Q*, the codename for OpenAI's o1. Everything we see coming out of R1 is an emergent property of RL.
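To make "RL without SFT" a bit more concrete, here is a minimal sketch of the kind of signal such training can use: a rule-based reward that checks the answer and the output format, plus a group-relative advantage that compares several sampled answers to the same prompt. This post does not spell out the recipe, so treat everything here as an assumption for illustration: the tag names, the reward values, and the function names `rule_based_reward` and `group_relative_advantages` are hypothetical and are not DeepSeek's code.

```python
import re
import statistics


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Hypothetical reward: 0.1 for showing reasoning in <think> tags,
    plus 1.0 if the text inside <answer> tags matches the reference."""
    reward = 0.0
    # Format check: reasoning is wrapped in <think>...</think>.
    if re.search(r"<think>.+?</think>", completion, flags=re.DOTALL):
        reward += 0.1
    # Accuracy check: exact match on the final answer.
    match = re.search(r"<answer>(.*?)</answer>", completion, flags=re.DOTALL)
    if match and match.group(1).strip() == reference_answer.strip():
        reward += 1.0
    return reward


def group_relative_advantages(rewards: list[float]) -> list[float]:
    """Score each sample relative to the other samples for the same prompt:
    (reward - group mean) / group standard deviation."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0.0:
        # All samples scored the same, so none is preferred over another.
        return [0.0 for _ in rewards]
    return [(r - mean) / std for r in rewards]


# Two sampled completions for the prompt "What is 2 + 2?"
completions = [
    "<think>2 + 2 is 4</think><answer>4</answer>",
    "<answer>5</answer>",
]
rewards = [rule_based_reward(c, "4") for c in completions]
advantages = group_relative_advantages(rewards)
```

In this toy example the first completion gets a positive advantage and the second a negative one, so a policy update would push the model toward answers that are both correct and show their reasoning. No human-written demonstrations are involved, which is the point of skipping SFT.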

Here are 2 classes to better understand how DeepSeek R1 works:

https://www.youtube.com/watch?v=XMnxKGVnEUc&t=329s

The amount of learning from what happened is outstanding, and at best this has been a necessary step to get all of us laser-focused on the target.
