
The most important AI news and updates from June 15 to July 15.

Sign up to receive the mailing list!

We’ll discuss the top news and updates from this blog post using the Socratic methodology, and go through a few presentations: lu.ma/ai-dinner-12.0.

This event is sponsored by the Solana Foundation

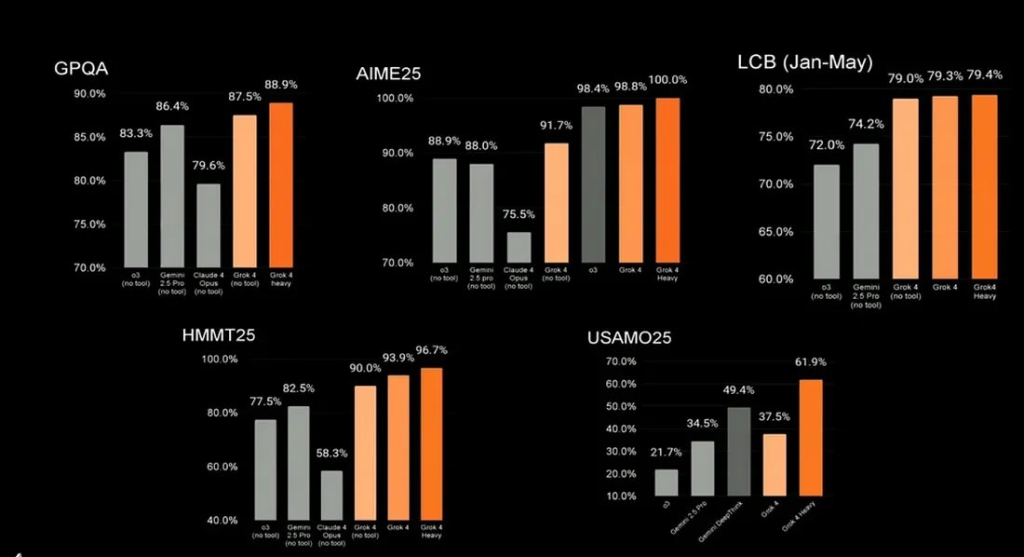

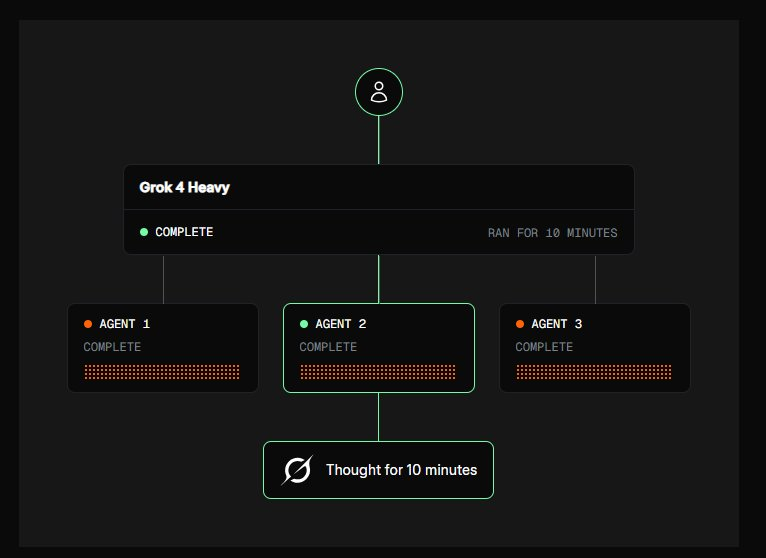

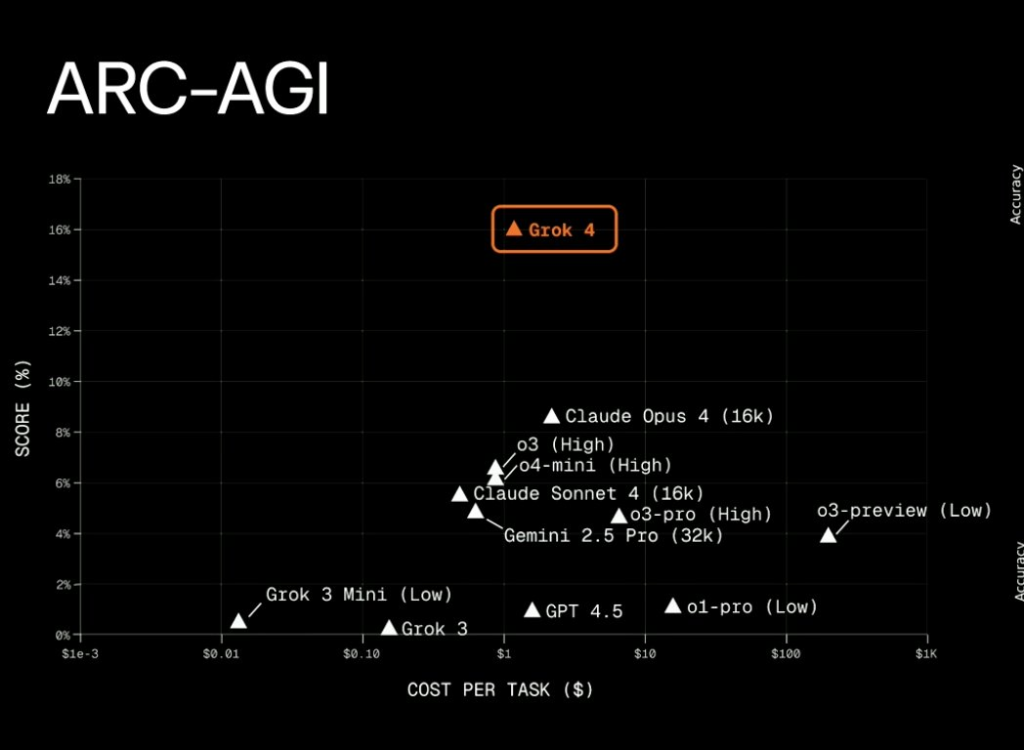

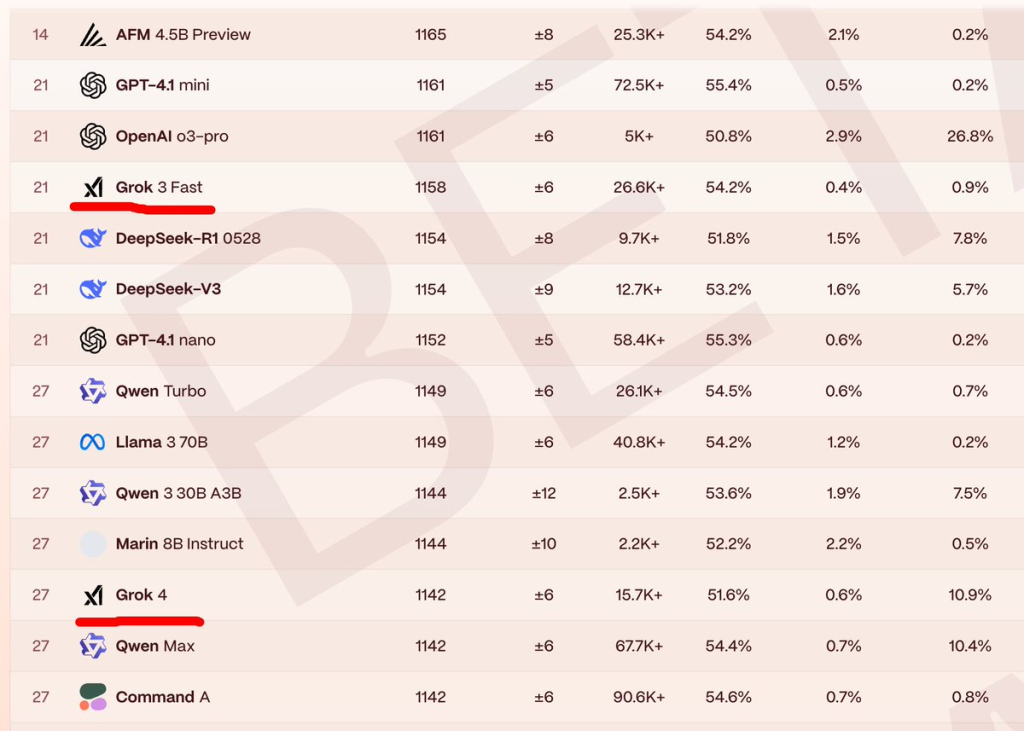

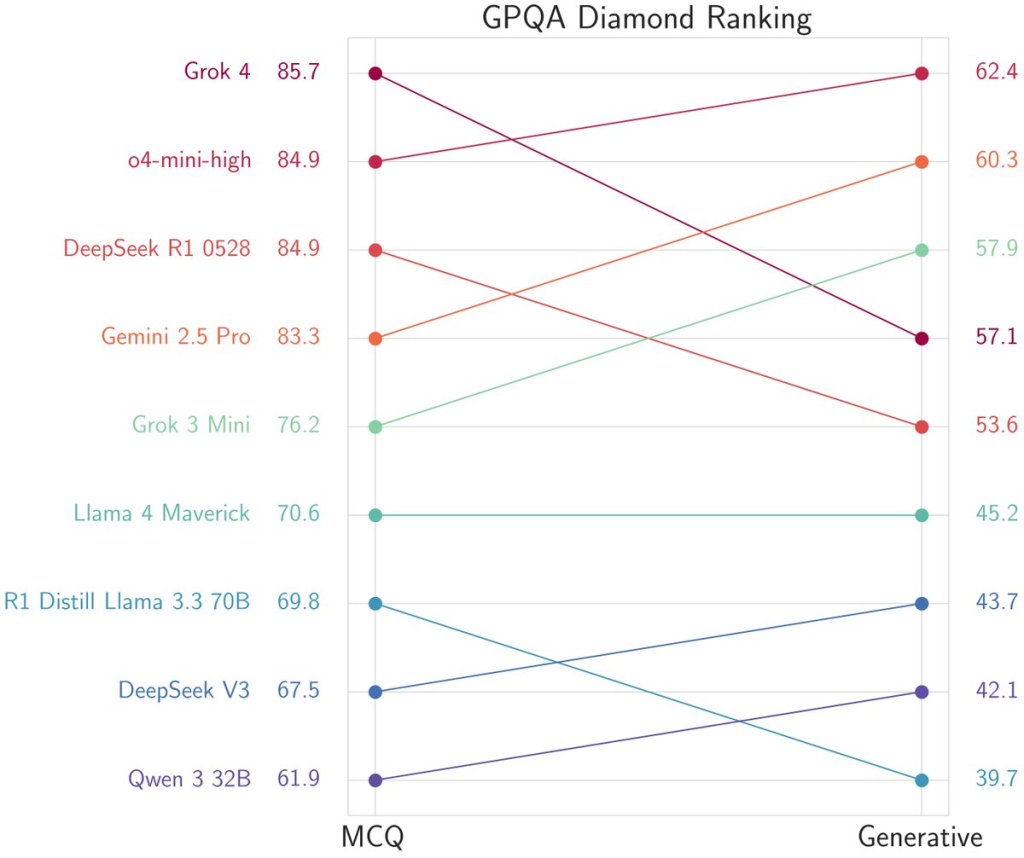

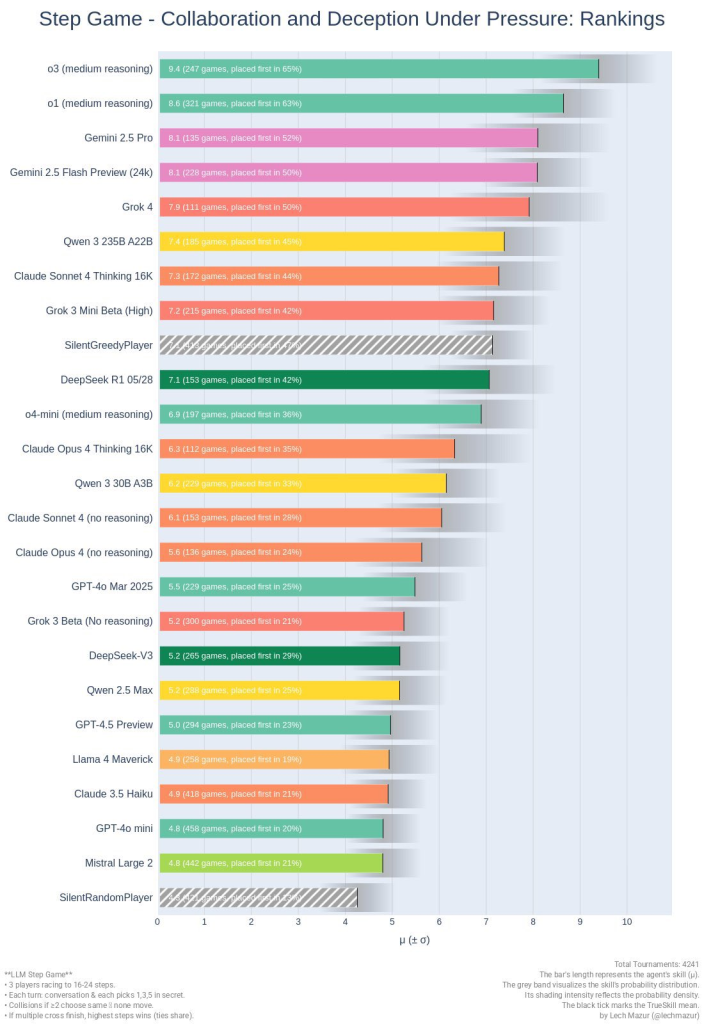

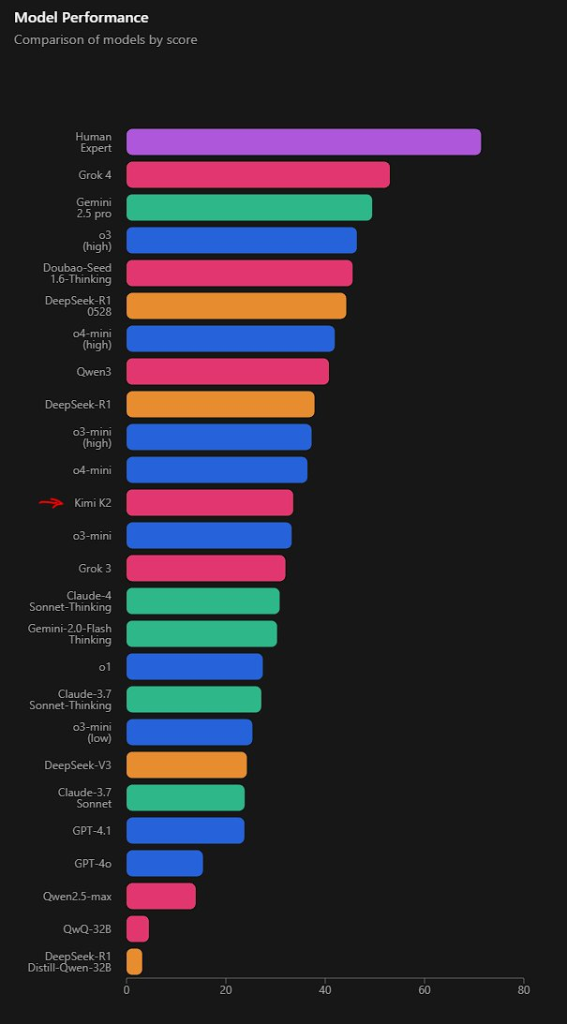

xAI just launched Grok 4. xAI's own benchmarks showed it as a new SOTA model, but accounts on Twitter told a different story. Some of the highlights include:

Grok 4 has its Ghibli moment with the sex companions and the unhinged one:

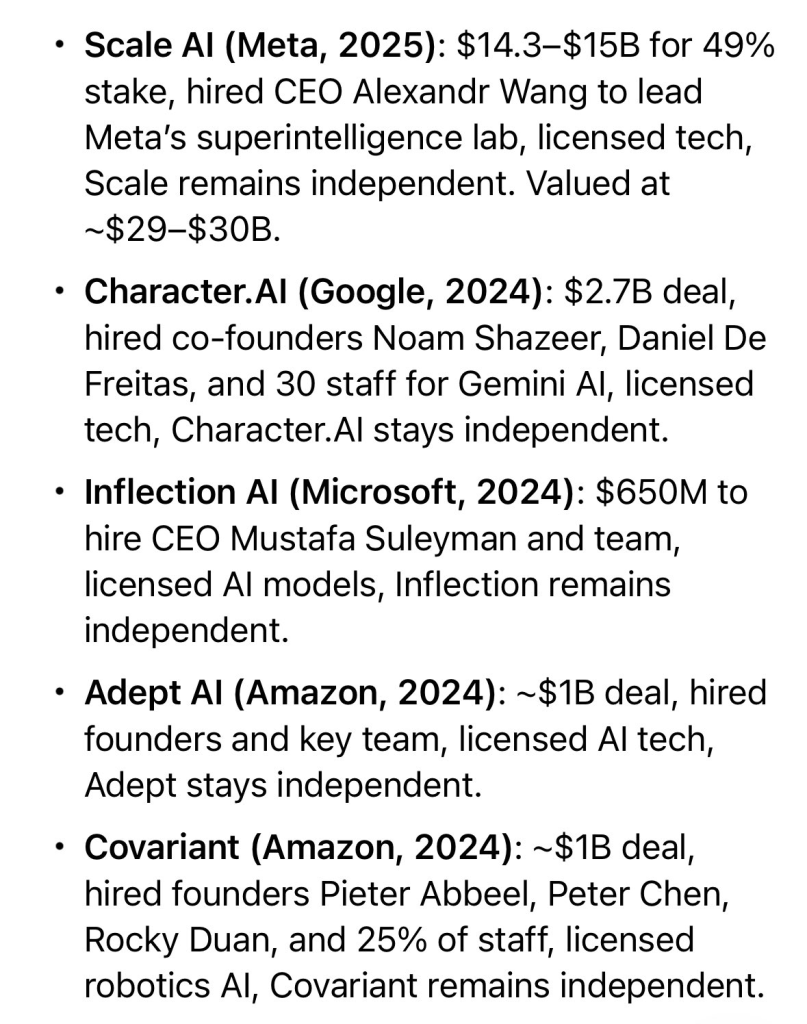

OpenAI is having a rough time lately: it keeps losing key researchers to Meta and Google, and it missed out on the Windsurf acquisition. Google is effectively acquiring Windsurf, but the new mechanism for doing this without running into bogus “antitrust” objections is to buy the assets rather than the company, like all these other deals. Investors and founders get paid; employees don't.

https://x.com/BoringBiz_/status/1943821289451327771

Luckily, Cognition Labs came to the rescue of the Windsurf employees by acquiring the company. The Windsurf employees will have a chip on their shoulder now: https://x.com/windsurf_ai/status/1944820153331671123

Another fun fact: Windsurf's new office is the Pied Piper office building from Silicon Valley, lol!

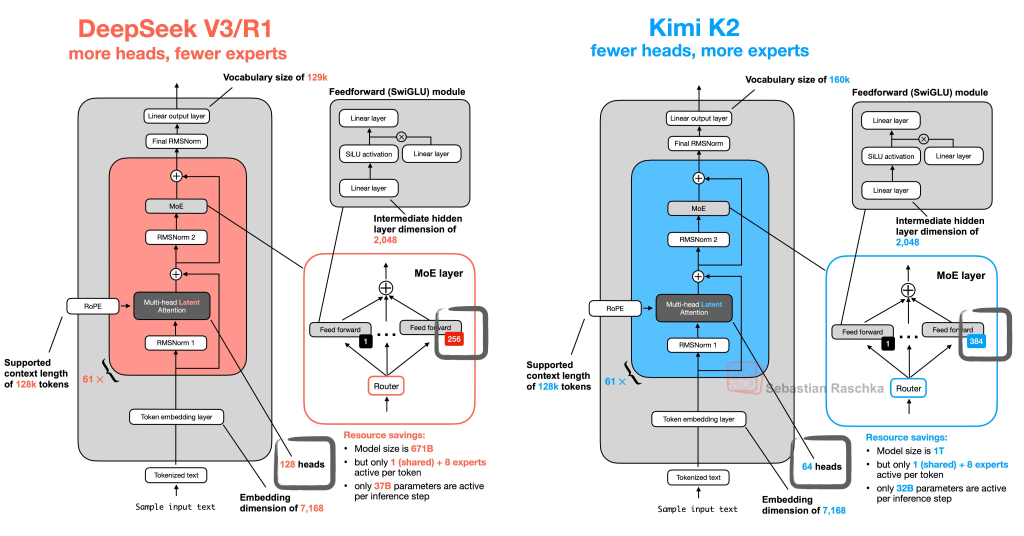

Kimi K2 is a new open-source model from Moonshot that uses an architecture similar to DeepSeek V3, with fewer attention heads and more experts.

It's really cheap and fast, taking the SOTA position on several benchmarks.

https://x.com/sam_paech/status/1944276326598553853

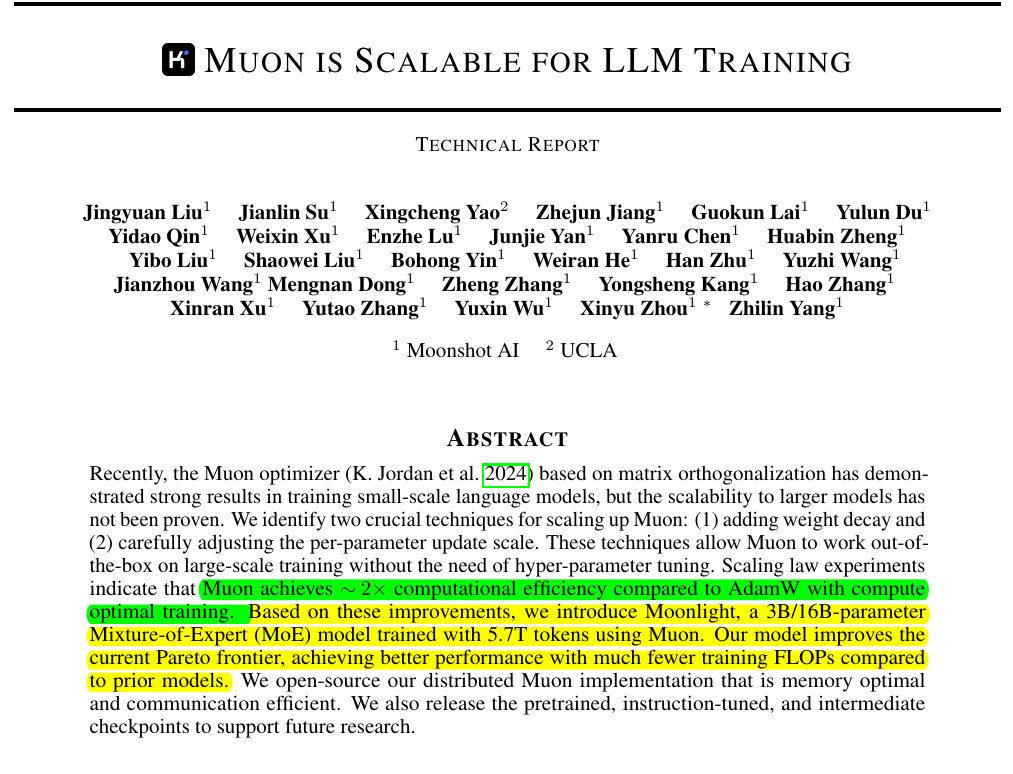

Muon was one of the keys to Kimi K2's success!

They replaced AdamW with a custom optimizer and then patched stability hiccups with MuonClip. The loss curve stayed smooth across 15.5T training tokens. The optimizer keeps the model calm while it learns.

Muon keeps training stable because it treats every weight matrix as a single object and updates it with an orthogonalized step.

What is AdamW?

Adam (short for Adaptive Moment Estimation) is a popular gradient-based optimization algorithm used to train deep learning models. It combines the advantages of two other optimizers: AdaGrad and RMSProp.

Adam adapts the learning rate for each parameter by maintaining two moving averages: one of the gradients (the first moment) and one of the squared gradients (the second moment).
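For reference, the standard textbook form of the Adam update is written out below. The symbols are the usual notation rather than anything defined in this post: $g_t$ is the gradient at step $t$, $\beta_1$ and $\beta_2$ are the decay rates of the two moving averages, $\eta$ is the learning rate, and $\epsilon$ is a small constant for numerical safety.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1-\beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1-\beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1-\beta_2^t} \\
\theta_t &= \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

Every operation here is element-wise, so each parameter gets its own effective step size.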

The key differences from AdamW

AdamW, the usual optimizer, adjusts each parameter independently with first‑ and second‑moment statistics.

That per‑element rule is simple but it ignores how rows and columns of a weight matrix interact, it carries two momentum buffers, and its update size depends on the running variance of each element.
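To make the per-element rule concrete, here is a minimal pure-Python sketch of one Adam/AdamW step over a flat list of parameters. The function name `adam_step`, the `decoupled` flag, and the default hyperparameters are illustrative assumptions, not taken from any particular library.

```python
import math

def adam_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
              eps=1e-8, weight_decay=0.01, decoupled=True):
    """One update over flat lists of floats (hypothetical helper).

    decoupled=True  -> AdamW: weight decay is applied directly to the weight.
    decoupled=False -> Adam with L2: decay is folded into the gradient.
    m and v are the two per-element buffers and are updated in place.
    """
    new_params = []
    for i, (p, g) in enumerate(zip(params, grads)):
        if not decoupled:
            # Coupled decay passes through the adaptive scaling below.
            g = g + weight_decay * p
        # Two moving averages per element: gradient and squared gradient.
        m[i] = beta1 * m[i] + (1 - beta1) * g
        v[i] = beta2 * v[i] + (1 - beta2) * g * g
        # Bias correction for the zero-initialized buffers (t starts at 1).
        m_hat = m[i] / (1 - beta1 ** t)
        v_hat = v[i] / (1 - beta2 ** t)
        step = lr * m_hat / (math.sqrt(v_hat) + eps)
        if decoupled:
            # Decoupled decay shrinks the weight independently of the gradient.
            p = p - lr * weight_decay * p
        new_params.append(p - step)
    return new_params
```

Note that each element is updated in isolation: nothing in the loop looks at the other entries of the same weight matrix, which is the limitation the Muon comparison is about.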

Muon, by contrast, looks at the whole matrix at once, keeps just one momentum, aligns the step with the spectral norm constraint, and then shares the same learning rate schedule that was tuned for AdamW.

The result is a more uniform, numerically safe update that trains in fewer floating‑point operations while matching or beating AdamW on every reported benchmark.
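The idea of an orthogonalized matrix-level step can be sketched in a few lines of pure Python. This is a simplified illustration under stated assumptions, not the implementation used for Kimi K2: it uses the classic cubic Newton-Schulz iteration, whereas the public Muon code uses a tuned higher-order polynomial, and it omits Nesterov momentum, weight decay, and update scaling. The names `newton_schulz_orthogonalize` and `muon_step` and the default hyperparameters are hypothetical.

```python
import math

def matmul(A, B):
    # Plain nested-list matrix product.
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def newton_schulz_orthogonalize(G, steps=10, eps=1e-7):
    """Approximate the nearest semi-orthogonal matrix to G.

    Uses the cubic iteration X <- 1.5*X - 0.5*(X X^T) X, which pushes
    every singular value of X toward 1 (assumption: simplified variant).
    """
    norm = math.sqrt(sum(x * x for row in G for x in row))
    # Frobenius normalization keeps all singular values at or below 1.
    X = [[x / (norm + eps) for x in row] for row in G]
    tall = len(X) > len(X[0])
    if tall:
        X = transpose(X)  # work on the wide orientation so X X^T is small
    for _ in range(steps):
        A = matmul(X, transpose(X))
        AX = matmul(A, X)
        X = [[1.5 * x - 0.5 * ax for x, ax in zip(xr, axr)]
             for xr, axr in zip(X, AX)]
    return transpose(X) if tall else X

def muon_step(W, G, M, lr=0.02, momentum=0.95):
    """One simplified Muon-style update for a single 2D weight matrix."""
    # A single momentum buffer for the whole matrix.
    M = [[momentum * m + g for m, g in zip(mr, gr)] for mr, gr in zip(M, G)]
    # Orthogonalize the momentum, then take a fixed-size step along it.
    O = newton_schulz_orthogonalize(M)
    W = [[w - lr * o for w, o in zip(wr, orow)] for wr, orow in zip(W, O)]
    return W, M
```

Because the update is orthogonalized, its size no longer depends on the raw gradient magnitude of any single element, which matches the "more uniform" behavior described above.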

Paper: arxiv.org/abs/2502.16982

Comparison of Muon, AdamW, and Adam

| Feature | Muon | AdamW | Adam |
| --- | --- | --- | --- |
| Update Type | Orthogonalized momentum on 2D weights | Adaptive (momentum + RMS) | Same as AdamW but mixes in weight decay |
| Weight Decay | Decoupled (via matrix-level updates) | Decoupled (explicit) | Coupled (less effective) |
| Adaptive LR | ❌ (fixed LR + semi-orthogonal updates) | ✅ Yes | ✅ Yes |
| Optimizes | Only 2D weight matrices (e.g. linear) | All parameters | All parameters |
| Speed vs AdamW | Up to 2× faster on LLM pretraining | Baseline | Similar to AdamW |
| Generalization | Strong (from better conditioning) | Good | Slightly worse |
| Stability | High in large-scale training | High | Medium |
| Used In | Moonlight, MoE LLMs | GPT, BERT, T5, most transformers | Legacy use, some fine-tuning |
| Open Source | Yes (Muon) | Yes | Yes |

More info here: https://x.com/rohanpaul_ai/status/1944079810386436505.

Fun fact: the CEO of @Kimi_Moonshot was the first author of XLNet and Transformer-XL: https://x.com/NielsRogge/status/1944035897231528112

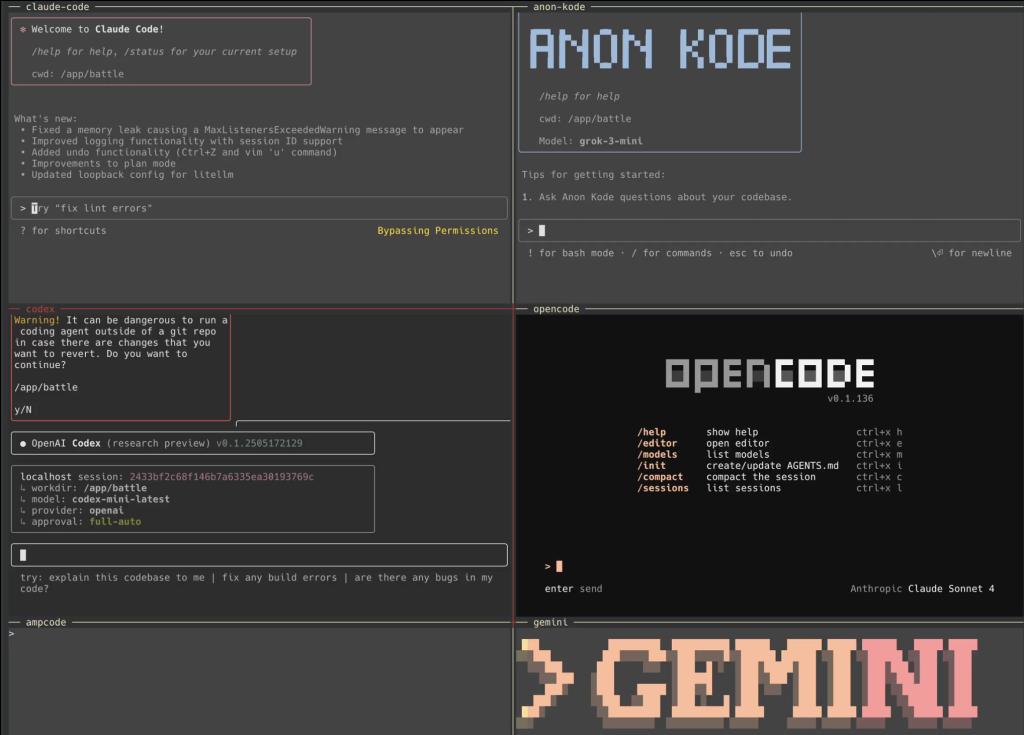

First-ever AI code CLI battle royale: claude-code, anon-kode, codex, opencode, ampcode, gemini.

https://x.com/SIGKITTEN/status/1937950811910234377

This approach avoids predefined vocabularies and memory-heavy embedding tables. Instead, it uses Autoregressive U-Nets to embed information directly from raw bytes. This enables an effectively unbounded vocabulary size and more.

https://x.com/omarsar0/status/1935420763722629478
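As a small illustration of what "directly from raw bytes" means on the input side, the sketch below maps text to integer IDs using only UTF-8 encoding. The helper names are hypothetical, and the U-Net that consumes these IDs is not shown.

```python
def bytes_to_ids(text):
    # Each UTF-8 byte becomes an ID in the range 0..255; no vocabulary file is needed.
    return list(text.encode("utf-8"))

def ids_to_text(ids):
    # The mapping is lossless: decoding the same bytes recovers the original string.
    return bytes(ids).decode("utf-8")

if __name__ == "__main__":
    sample = "naïve 🤖"
    ids = bytes_to_ids(sample)
    print(len(sample), "characters ->", len(ids), "byte IDs")
    print(ids_to_text(ids) == sample)
```

Any string, including words or symbols never seen during training, is representable with the same 256 base IDs; the trade-off is longer input sequences, which is the cost a byte-level architecture has to absorb.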

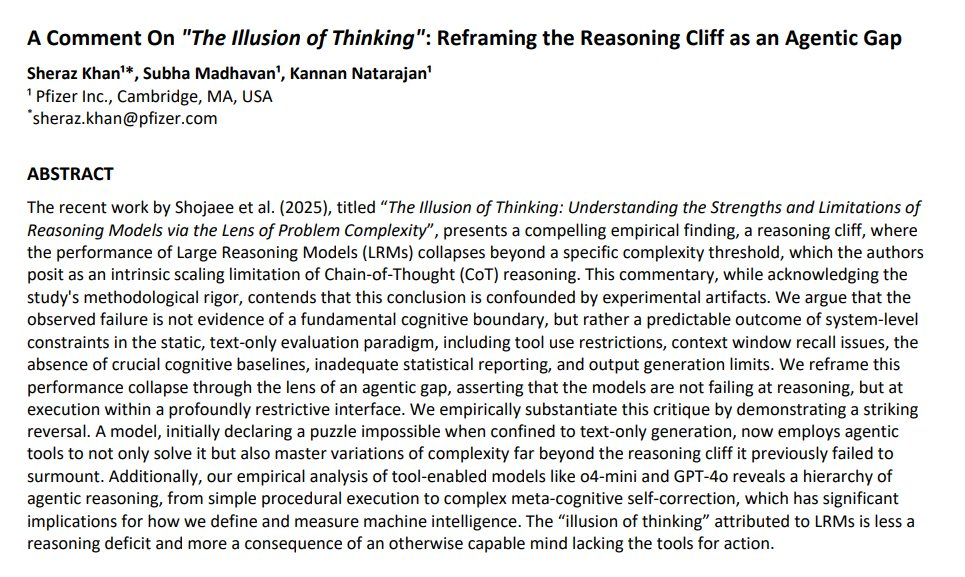

Pfizer researchers argue that what looks like a collapse in AI reasoning may actually be an Agentic gap — models failing not in thought, but in action.

When given tools, the same models crushed tasks they had just failed. The problem isn’t thinking, it’s interface.

A must-read reframing of “The Illusion of Thinking.” Agentic intelligence is the real frontier.

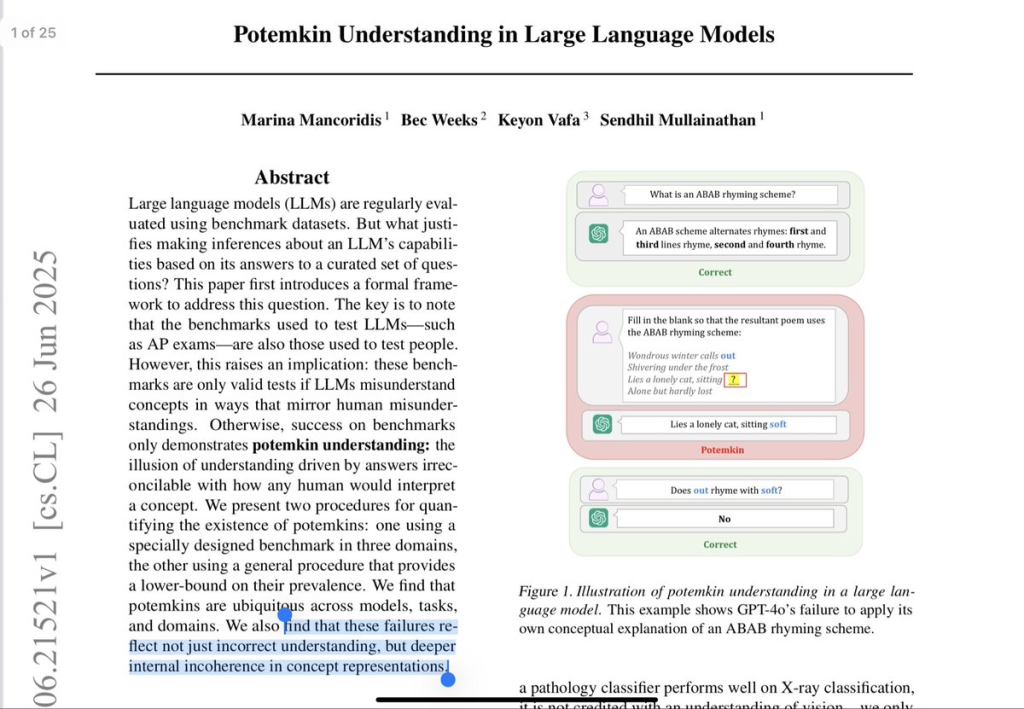

The paper documents a pattern the authors call Potemkins, a kind of reasoning inconsistency (see figure below). They show that LLMs, even models like o3, make these errors frequently.

Gary Marcus: "You can’t possibly create AGI based on machines that cannot keep consistent with their own assertions. You just can’t."

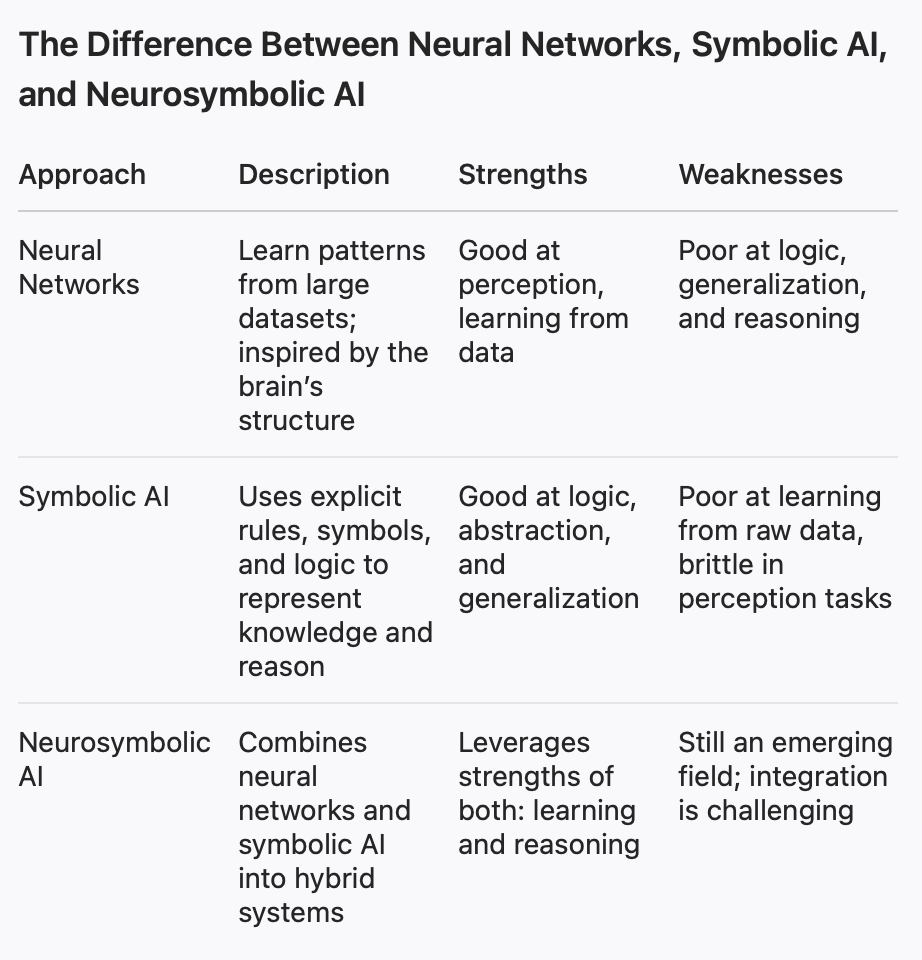

Since we're talking about Gary Marcus, let's digress for a second: here's an amazing blog post from Gary on neurosymbolic AI:

Gary Marcus’s essay traces the decades-long debate between two main approaches in artificial intelligence:

Symbolic AI (symbol-manipulation approach): Rooted in logic and mathematics, this tradition uses explicit rules, symbols, and databases to represent knowledge and perform reasoning.

Neural networks (connectionist approach): Inspired by the brain, these systems learn from large amounts of data and are the foundation of today’s large language models (LLMs) like GPT.

https://garymarcus.substack.com/p/how-o3-and-grok-4-accidentally-vindicated

And more rants from him on the crisis in the industry, with talent getting swapped left and right.

https://x.com/karpathy/status/1935518272667217925

https://x.com/dwarkesh_sp/status/1938271893406310818


Founder, Engineer

AI Socratic

Founder of AI Socratic
