
Updated on Sep 8th

The top AI developments from Jul 15–Sep 15, debated Socratically over AI dinners in NY and SF. This is our 1st draft.

**Note by the Author** This blog post is HANDCRAFTED 🤚. Any use of em dash (—) or delve is a conscious decision by the writer (me) to convey something or for pure personal pleasure: I love em dashes, and I started using them after reading *On Writing Well* by William Zinsser. Enjoy!

Sign up for the mailing list!

As mentioned in part 1 of this AI Socratic edition, in September we're organizing 2 AI dinners. Hope to see you there:

Sep 10th

Solana Skyline, NYC

Link: https://lu.ma/ai-dinner-13.0

Sep 17th

Frontier Tower, SF

Link: https://lu.ma/ai-dinner-13.2

Nov 20–22, 2025

New York City

We totally recommend this event. We're currently working on getting a group discount for our community and a discount code for our readers. In the meantime, if money is not a problem for you, go ahead and sign up: it's one of the best AI events.

Tempo is a new blockchain specifically designed for stablecoin payments. It was launched by Stripe and developed by Paradigm, a well-known crypto developer lab.

Tempo is designed to enable global payments, payroll, remittances, tokenized deposits, microtransactions, and ✨ A2A payments ✨.

Stablecoins are a big deal because they strengthen and distribute the USD without the need for intermediaries. In fact, the new US regulations are not only friendly to stablecoins but actively push for them. Recently Paolo Ardoino, CEO of Tether (a stablecoin with $170B in market cap), met with POTUS to discuss exactly this.

Salesforce, Amazon and many more predict that the agent economy will surpass the SaaS economy by 2030, which implies autonomous agents paying for services, likely via cryptocurrency rails.

Tempo is designed for both humans and agents.

Interestingly, in 2019 Zuck/FB tried to launch a similar cryptocurrency called Libra, later renamed Diem. I wrote about Libra when it came out: Analysis of Libra part 1 and part 2. Libra failed because of strong regulatory pushback, but clearly today we live in different times.

Even the GTM strategy is very similar: capture as many strong partnerships as possible to increase distribution.

I'll try to write an in-depth analysis of Tempo, since it touches my 2 favorite topics: cryptocurrency and AI agents.

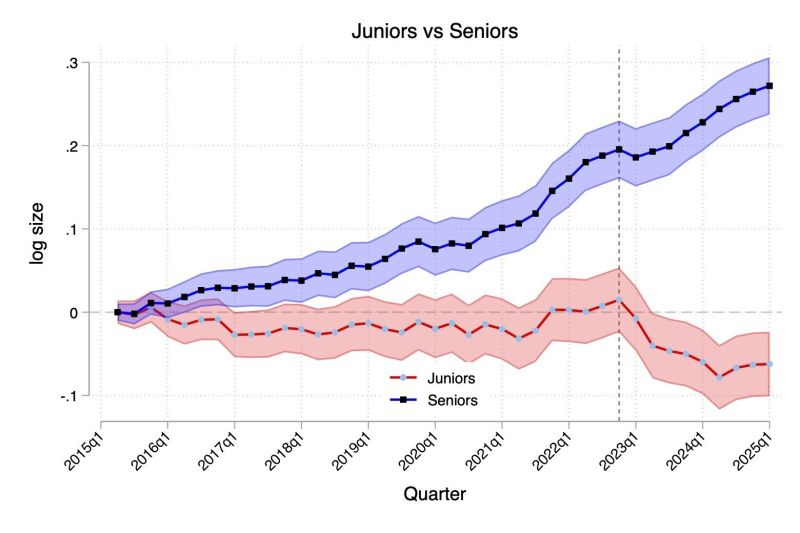

A Harvard study tracked 285,000 firms and 62 million workers from 2015 to 2025, concluding, in effect, that 1 senior + 3 juniors is now equivalent to 1 senior + Claude. The effect is strongest in the wholesale and retail sectors, where junior hiring fell by about 40%.

This shift may have lasting effects on career mobility and wage inequality.

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5425555

https://x.com/kyle/status/1963368557347311929

https://x.com/wolflovesmelon/status/1963736263246389415

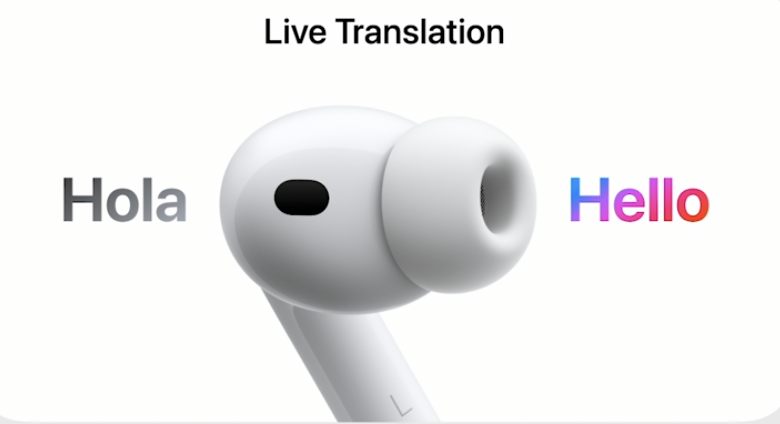

This new launch might single-handedly put Apple back in the AI game. From the demo at the Apple Event it looks very simple: if you're talking with someone who speaks another language and you turn on translation, the AirPods dim the other person's voice while you hear the translation. Simple and effective.

September is the beginning of fundraising season. While Devin wasn't great a year ago, it was the first semi-autonomous coding agent, like Codex or Claude Code, to integrate with GitHub and your company's tools, and AFAIK it is still the only one with a browser mode to test the changes it implements. So, congrats to Cognition on the successful raise. https://x.com/cognition/status/1965086655821525280

This Series C funding round, led by @ASMLcompany, fuels Mistral AI scientific research to keep pushing the frontier of AI to tackle the most critical technological challenges faced by strategic industries.

https://x.com/MistralAI/status/1965311339368444003

Great content 🤩: a video with amazing visuals. It uses the sliding-block puzzle Klotski to explain state spaces in CS. Each configuration of the puzzle becomes a node in a graph and each move becomes an edge, showing how an empty space and a few simple rules create complex, chaotic structures. It highlights how CS provides "x-ray vision" into hidden patterns in life.

https://www.youtube.com/watch?v=YGLNyHd2w10&themeRefresh=1
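To make the "puzzle as a graph" idea concrete, here is a minimal sketch. It is not Klotski (whose blocks have different sizes); as a simplifying assumption it uses a tiny sliding-tile puzzle on a 2×3 board, with `0` as the empty space. The helper names `neighbors` and `explore` are illustrative, not from the video. A breadth-first search starts from one configuration and records every configuration reachable by sliding a tile into the blank, which builds the state-space graph.

```python
from collections import deque

def neighbors(state, rows, cols):
    """Yield every state reachable with one move: a tile next to the blank (0) slides into it."""
    blank = state.index(0)
    r, c = divmod(blank, cols)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols:
            swap = nr * cols + nc
            cells = list(state)
            cells[blank], cells[swap] = cells[swap], cells[blank]
            yield tuple(cells)

def explore(start, rows, cols):
    """Breadth-first search over the state space: maps each reachable state (node) to its neighbors (edges)."""
    graph = {}
    seen = {start}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        graph[state] = set(neighbors(state, rows, cols))
        for nxt in graph[state]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return graph

# A 2x3 board holding tiles 1..5 plus the blank (0) in the bottom-right corner.
graph = explore((1, 2, 3, 4, 5, 0), rows=2, cols=3)
edges = sum(len(v) for v in graph.values()) // 2  # every move is counted from both ends
print(len(graph), edges)  # 360 420
```

Only 360 of the 720 possible arrangements are reachable: a parity invariant splits the state space into two disconnected halves. This is the kind of hidden structure the video makes visible. Even a 2×2 board (`explore((1, 2, 3, 0), 2, 2)`) already forms a graph: a single cycle of 12 states.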

Dwarkesh thinks AGI is further away than we think because, as R. Sutton said, an AI's usefulness depends very strongly on its ability to learn. Today's LLMs lack continual learning: unlike humans, they can't learn and improve while on the job. Compute scaling will also hit a wall, so new breakthroughs will be necessary. Short video, but to the point!

https://www.youtube.com/watch?si=D2v2ItV_2K0N0IMA&t=90&v=nyvmYnz6EAg&feature=youtu.be


Founder, Engineer

AI Socratic

