
The most important AI news and updates from last month: May 15 - June 15.

Sign up for the mailing list!

The next AI dinner will be on June 18th, and it will be hosted in the Arize.ai office.

We'll discuss the top news and updates from this blog post using the Socratic method, and we'll also have a few presentations:

Kerem Kazan will present "Commenting on chess moves in natural language (training LLM via RL to play chess)".

Jianing Qi will present the paper Learning to Reason Across Parallel Samples for LLM Reasoning, which uses RL to train a tiny LLM that reasons over multiple sampled outputs of a model to produce the final answer. Is RL optimizing the output distribution, or learning new abilities?

The AI Engineer conference had numerous snafus: poor to nonexistent internet, malfunctioning big screens, unclear ticket access, general organizational issues, insufficient food for attendees, high prices, and speakers who were mostly promoting their products. Still, it turned out to be one of my favorite events of the year and attracted an incredibly high level of talent.

Jory Pestorious wrote this fantastic summary of the top insights from the AI Engineer conference:

Link: http://jorypestorious.com/blog/ai-engineer-spec

Another interesting report comes from Thomas Gear:

Link: https://x.com/tg_bytes/status/1931938102861271042

Here’s the recording of the general track. All the advanced talks in the RL track were just incredible and made the conference worth it.

https://www.youtube.com/watch?v=z4zXicOAF28&t=18946s

The highlight of the tech talks for me was Daniel Han from Unsloth, an RL beast! https://x.com/danielhanchen/status/1930752903960211608

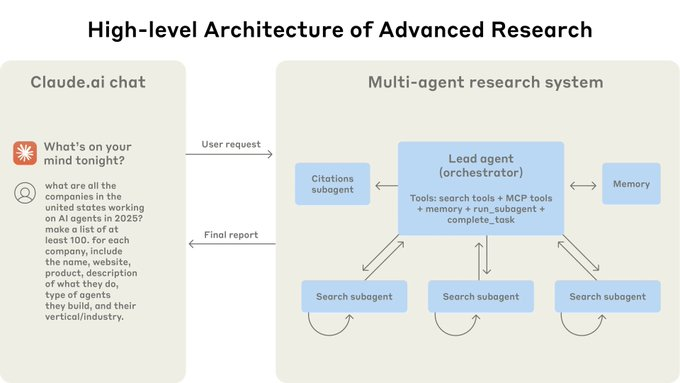

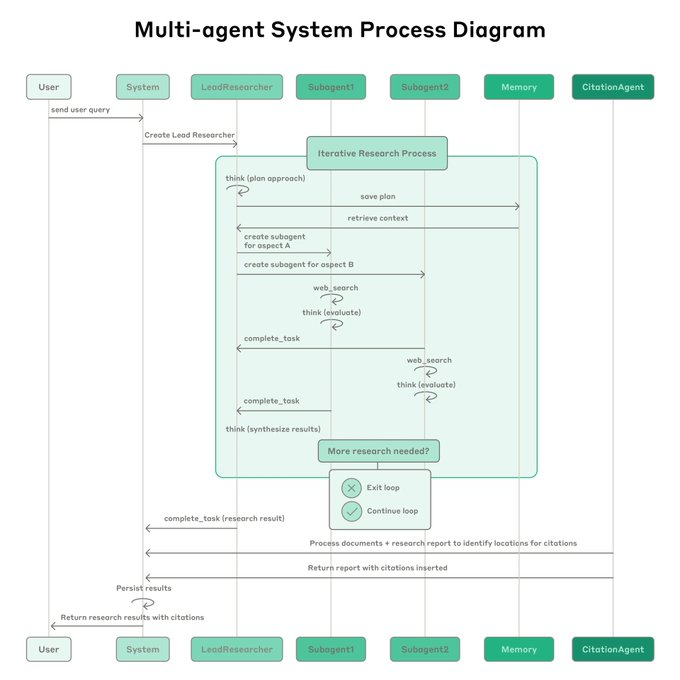

Anthropic shares how they built Claude's new multi-agent Research feature, an architecture where a lead Claude agent spawns and coordinates subagents to explore complex queries in parallel. They use this orchestrator-worker architecture:

Traditional Retrieval-Augmented Generation (RAG) approaches use static retrieval: they fetch the set of chunks most similar to the input query and use those chunks to generate a response. Anthropic's Research architecture instead uses a multi-step search that dynamically finds relevant information in parallel, adapts to new findings, and analyzes results to formulate high-quality answers.
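The orchestrator-worker pattern can be sketched with Python's `asyncio`: a lead agent plans subqueries, fans them out to subagents that run concurrently, adapts based on what comes back, and then synthesizes. This is a minimal illustration, not Anthropic's implementation; `plan`, `subagent`, and `follow_ups` are hypothetical stand-ins for LLM and search-tool calls.

```python
import asyncio


def plan(query: str) -> list[str]:
    """Hypothetical lead-agent step: split a query into parallel subtasks."""
    return [f"{query}: angle {i}" for i in range(3)]


async def subagent(subquery: str) -> str:
    """Hypothetical worker: a real one would loop over LLM and search-tool calls
    in its own context window and return condensed findings."""
    await asyncio.sleep(0)  # placeholder for network-bound tool calls
    return f"findings for {subquery!r}"


def follow_ups(notes: list[str]) -> list[str]:
    """Hypothetical adaptation step: decide what to research next (nothing here)."""
    return []


async def lead_agent(query: str, max_rounds: int = 3) -> str:
    notes: list[str] = []
    subqueries = plan(query)
    for _ in range(max_rounds):
        if not subqueries:
            break
        # Fan out: all subagents for this round run concurrently
        findings = await asyncio.gather(*(subagent(q) for q in subqueries))
        notes.extend(findings)
        # Adapt: the next round depends on what was found, unlike one-shot retrieval
        subqueries = follow_ups(notes)
    # Synthesize: a real lead agent would ask the LLM to write the final answer
    return "\n".join(notes)


print(asyncio.run(lead_agent("How do TPUs differ from GPUs?")))
```

The loop is the key difference from static RAG: retrieval is not a single similarity lookup but repeated rounds whose next step depends on intermediate findings.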

Token-efficient scaling: performance gains correlate strongly with token usage and parallel tool calls. By distributing work across multiple agents and context windows, Claude’s system scales reasoning capacity efficiently. However, this comes at roughly 15× the token cost of standard chats, so it is suitable for high-value queries only.

Flexible evaluation + production reliability: Anthropic uses LLM-as-judge scoring with rubrics for factuality, citation, and efficiency, alongside human testing to catch subtle failures. For reliability, they built resumable stateful agents with checkpointing, rainbow deployments, and full observability of agent decision traces, crucial for debugging non-deterministic, long-running agents.
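The idea behind resumable agents with checkpointing can be shown in a few lines: persist state after every step so a crashed run resumes where it left off instead of starting over. This is a minimal sketch under assumed conventions (steps as `(name, fn)` pairs, state stored in a JSON file), not Anthropic's implementation.

```python
import json
from pathlib import Path


def run_agent(steps, checkpoint: Path) -> dict:
    """Run `steps` in order, saving state after each one so a crash can resume.

    steps: list of (name, fn) pairs where fn(data) returns a dict of updates
           (a hypothetical step format chosen for this sketch).
    """
    if checkpoint.exists():
        state = json.loads(checkpoint.read_text())
    else:
        state = {"done": [], "data": {}}
    for name, fn in steps:
        if name in state["done"]:
            continue  # completed in an earlier run; skip instead of redoing work
        state["data"].update(fn(state["data"]))
        state["done"].append(name)
        # Write to a temp file, then rename, so a crash never leaves a half-written checkpoint
        tmp = checkpoint.with_suffix(".tmp")
        tmp.write_text(json.dumps(state))
        tmp.replace(checkpoint)
    return state["data"]
```

In a long-running agent the expensive part is each step (model calls, tool calls), so skipping already-completed steps on restart is what makes failures cheap to recover from.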

Blog: https://anthropic.com/engineering/built-multi-agent-research-system

Tweet: https://x.com/omarsar0/status/1933941545675206936

https://x.com/swyx/status/1933981734456230190

AI coding tooling and coding agents being packaged into products, and even worse, cloud products, is the wrong path. The command line is the way!

Tutorial on how to use it: https://x.com/rasmickyy/status/1931078993022730248

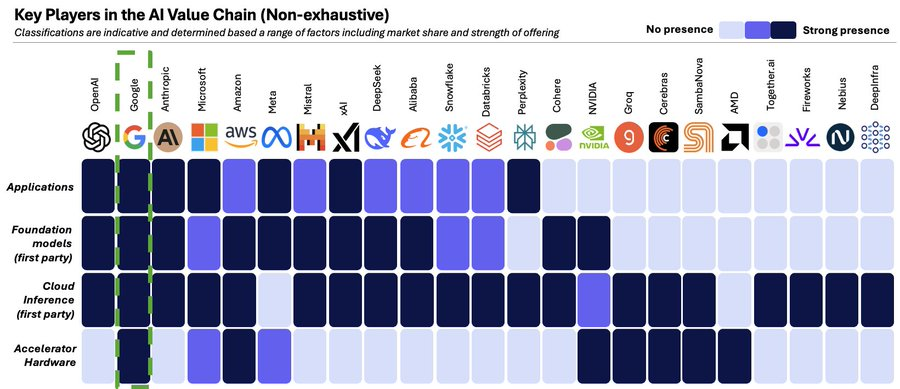

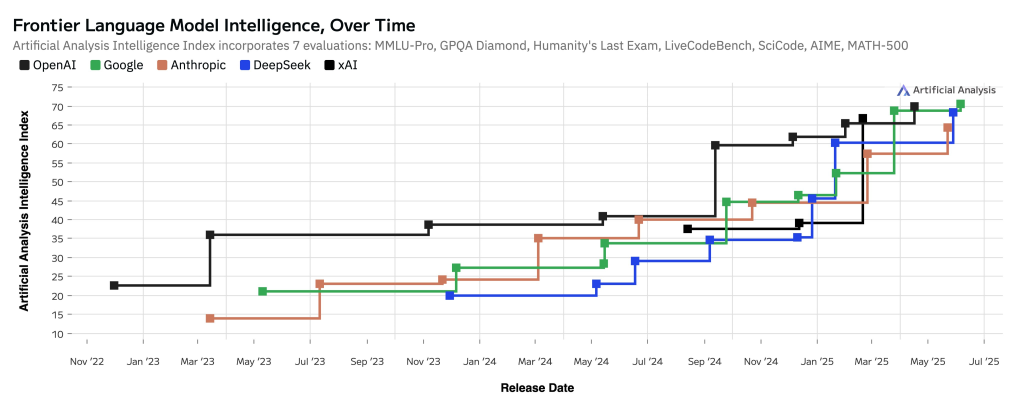

Google is currently the best vertically integrated company in AI, and it simply doesn't get enough credit for the TPU, one of the reasons it is among the top players.

https://x.com/ArtificialAnlys/status/1933254125757870104

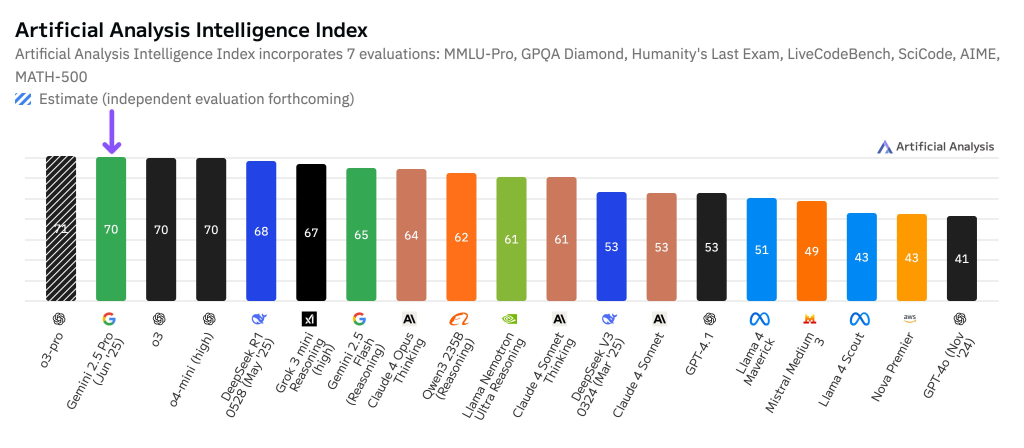

With the launch of Gemini 2.5 Pro, Google is now second only to OpenAI's o3-pro.

Google has been shipping constantly.

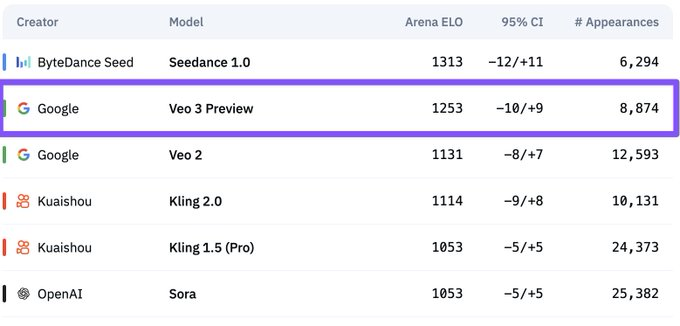

Veo 3 now ranks #2 among the best video models.

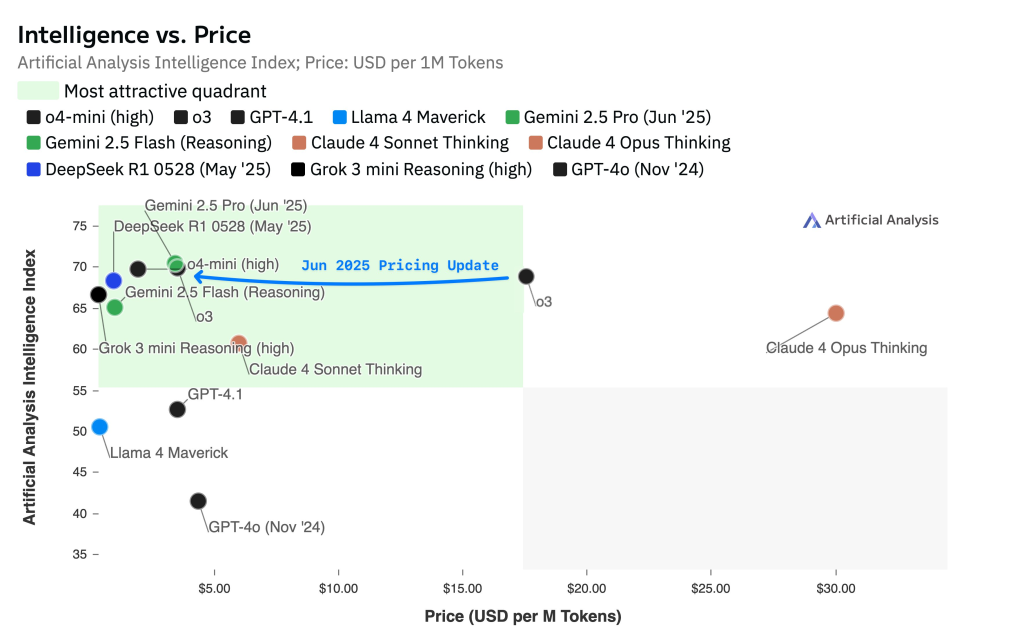

o3-pro performs on par with or better than o3 on most benchmarks, including ARC-AGI-1 and ARC-AGI-2. What's incredible, though, is the 80% price cut on o3 itself, which now costs about as much as GPT-4o!
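To put the 80% cut in dollar terms, here is a small cost calculator. It assumes o3's list prices of $10 / $40 per million input / output tokens before the June 2025 cut and $2 / $8 after; check current pricing before relying on these, and note that the request size is a hypothetical example.

```python
# Assumed o3 list prices in USD per 1M tokens: (input, output)
O3_BEFORE = (10.00, 40.00)
O3_AFTER = (2.00, 8.00)


def request_cost(prices, input_tokens, output_tokens):
    """Dollar cost of one request given (input, output) prices per 1M tokens."""
    p_in, p_out = prices
    return (input_tokens * p_in + output_tokens * p_out) / 1_000_000


# Hypothetical reasoning-heavy request: 5k input tokens, 20k output tokens
before = request_cost(O3_BEFORE, 5_000, 20_000)
after = request_cost(O3_AFTER, 5_000, 20_000)
print(f"${before:.3f} -> ${after:.3f} ({1 - after / before:.0%} cheaper)")
```

Because both the input and output prices dropped by the same factor, the saving is 80% regardless of the input/output mix.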

https://x.com/ArtificialAnlys/status/1932489573462081898

Twitter did what Twitter does and speculated that the cheaper o3 was a distilled model, but an insider says OpenAI has been using Codex internally to optimize the heck out of it, achieving the 80% cut without performance losses.

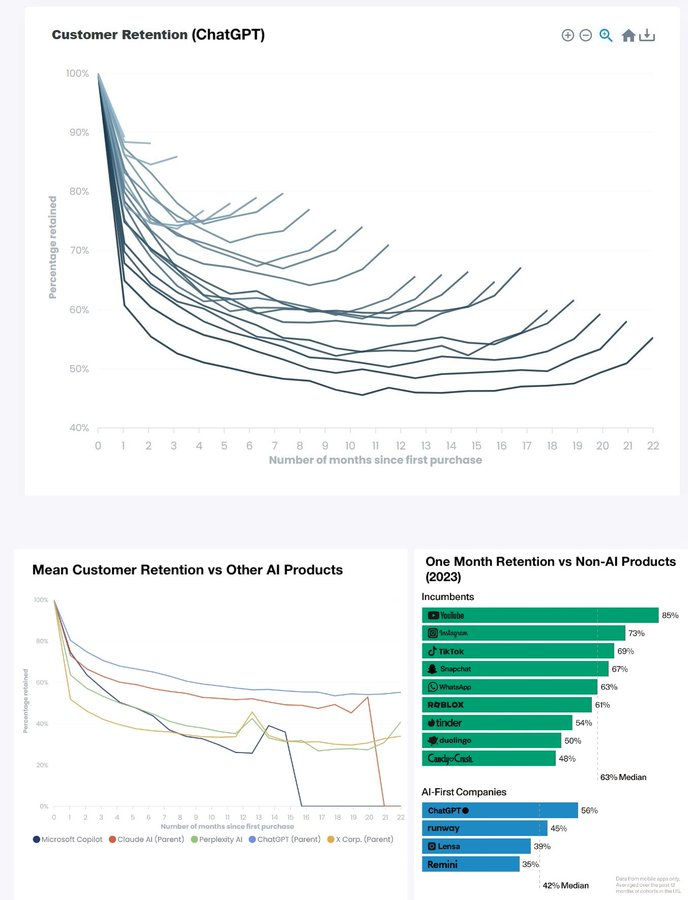

OpenAI's retention curve is a wet dream for most investors. Its one-month retention has skyrocketed from <60% two years ago to an unprecedented ~90%, while YouTube was best-in-class at ~85%. Six-month retention is trending toward ~80%: a rapidly rising smile curve.
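To make the retention numbers concrete: N-month retention is the share of a signup cohort still active N months later, and a "smile" curve is one that dips and then rises again as users come back. The cohort counts below are made up for illustration (they are not OpenAI's data), and `is_smile` is a simple hypothetical heuristic.

```python
def retention_curve(cohort_active, cohort_size):
    """Fraction of a signup cohort active in each month after signup.

    cohort_active[n] = users from the cohort active in month n (month 0 = signup month)
    """
    return [active / cohort_size for active in cohort_active]


def is_smile(curve):
    """Heuristic: the curve dips to a minimum before the last month, then rises."""
    low = curve.index(min(curve))
    return 0 < low < len(curve) - 1 and curve[-1] > curve[low]


# Hypothetical cohort of 1,000 users (illustrative numbers only)
curve = retention_curve([1000, 880, 820, 800, 790, 795, 805], 1000)
for month, r in enumerate(curve):
    print(f"month {month}: {r:.1%}")
print("smile curve:", is_smile(curve))
```

A classic product loses users every month, so its curve only flattens; a smile means returning users outnumber new churn after the initial drop.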

https://x.com/deedydas/status/1932619060057084193

After Meta's investment in Scale AI, Alexandr Wang will take on the role of head of AI at Meta. Some drama is already unfolding, with claims that he's toxic and only great at fundraising. We shall see.

Meta is currently offering $2M+/yr packages to AI talent and is still losing people to OpenAI and Anthropic (which has an 80% retention rate). Source.

The Mistral team is at it again with Magistral, a reasoning model designed to excel at domain-specific, transparent, and multilingual reasoning.

GRPO with a few edits:

1. Removed the KL divergence penalty

2. Normalized by total length (Dr. GRPO style)

3. Normalized advantages at the minibatch level

4. Relaxed the trust region

https://arxiv.org/pdf/2506.10910
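Here is a NumPy sketch of one reading of those four edits, computing the loss value for a batch: group-mean advantages, minibatch advantage normalization, asymmetric clipping with a larger upper epsilon (our interpretation of "relaxing the trust region"), normalization by total token count, and no KL term. The function name and `eps_high=0.28` are assumptions, and a real trainer would compute this in an autodiff framework to get gradients.

```python
import numpy as np


def grpo_variant_loss(logp_new, logp_old, rewards, group_ids, mask,
                      eps_low=0.2, eps_high=0.28):
    """Loss value for a GRPO-style update with the four edits listed above.

    logp_new, logp_old: (N, T) per-token log-probs under the current / sampling policy
    rewards:            (N,) scalar reward per sampled completion
    group_ids:          (N,) index of the prompt each completion was sampled for
    mask:               (N, T) 1.0 for completion tokens, 0.0 for padding
    """
    rewards = np.asarray(rewards, dtype=float)
    group_ids = np.asarray(group_ids)
    # Group-relative advantage: subtract the mean reward of the same prompt's group
    adv = np.zeros_like(rewards)
    for g in np.unique(group_ids):
        in_group = group_ids == g
        adv[in_group] = rewards[in_group] - rewards[in_group].mean()
    # Edit 3: normalize advantages over the whole minibatch
    adv = (adv - adv.mean()) / (adv.std() + 1e-8)

    ratio = np.exp(logp_new - logp_old)  # per-token importance ratio, (N, T)
    a = adv[:, None]                     # same advantage for every token of a completion
    # Edit 4: asymmetric clip; eps_high > eps_low relaxes the upper trust-region bound
    clipped = np.clip(ratio, 1 - eps_low, 1 + eps_high)
    per_token = np.minimum(ratio * a, clipped * a) * mask
    # Edit 2: divide by the total token count of the batch, not per-sequence length
    objective = per_token.sum() / mask.sum()
    # Edit 1: no KL-divergence penalty is added to the objective
    return -objective  # negate: maximizing the objective = minimizing the loss
```

The larger upper clip lets low-probability tokens with positive advantage grow faster, which is the usual motivation for relaxing the trust region in RL for reasoning.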

Simon Willison: all LLM API vendors are converging on the same product.

How do you define intelligence? How do you define life?

https://x.com/blaiseaguera/status/1924514755982606493

https://x.com/reedbndr/status/1927495304380559744

"We are past the event horizon": this phrase might make it into our future history books.

Sam Altman's The Gentle Singularity is a short read, jam-packed with philosophical takes and some fun facts: the average ChatGPT query uses about 0.34 watt-hours (a couple of minutes of a high-efficiency lightbulb) and roughly one fifteenth of a teaspoon of water.
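A quick sanity check on the lightbulb comparison: runtime in minutes is energy (Wh) divided by power (W), times 60. The 0.34 Wh figure is from the essay; the two bulb wattages are illustrative assumptions.

```python
WH_PER_QUERY = 0.34  # average ChatGPT query, per the essay

# Assumed bulb wattages (illustrative, not from the essay)
BULBS_W = {"10 W LED": 10.0, "60 W incandescent": 60.0}


def bulb_minutes(wh: float, watts: float) -> float:
    """Minutes a bulb drawing `watts` can run on `wh` watt-hours of energy."""
    return wh / watts * 60


for name, watts in BULBS_W.items():
    print(f"{name}: {bulb_minutes(WH_PER_QUERY, watts):.2f} min")
```

That works out to about two minutes for an efficient LED but only around 20 seconds for an old incandescent bulb, which is why the bulb type matters for the comparison.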

Dia Browser is out, go get it: diabrowser.com.

This research paper from Apple has been quite controversial for several reasons, the first being that Apple is lagging behind in the AI race: https://machinelearning.apple.com/research/illusion-of-thinking.

Are reasoning models like o1/o3, DeepSeek-R1, and Claude 3.7 Sonnet really “thinking”? Or are they just throwing more compute at pattern matching?

Apple designed an experiment using the Tower of Hanoi to test these models. Well, it turns out it was a memory problem: the models were failing because they were running out of context.
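The memory argument comes down to arithmetic: an optimal Tower of Hanoi solution for n disks takes 2^n - 1 moves, so writing out every move grows exponentially with n. The sketch below generates the moves with the classic recursion and compares the output size with a token budget; `TOKENS_PER_MOVE` and `OUTPUT_BUDGET` are illustrative assumptions, not figures from either paper.

```python
def hanoi(n, src="A", aux="B", dst="C", moves=None):
    """Return the optimal move list for n disks using the classic recursion."""
    if moves is None:
        moves = []
    if n > 0:
        hanoi(n - 1, src, dst, aux, moves)  # park n-1 disks on the spare peg
        moves.append((src, dst))            # move the largest disk
        hanoi(n - 1, aux, src, dst, moves)  # bring the n-1 disks back on top
    return moves


TOKENS_PER_MOVE = 10    # assumption: rough cost of writing one move out
OUTPUT_BUDGET = 64_000  # assumption: a hypothetical output-token limit

for n in (5, 10, 12, 15):
    count = len(hanoi(n))  # always 2**n - 1
    tokens = count * TOKENS_PER_MOVE
    verdict = "fits" if tokens <= OUTPUT_BUDGET else "exceeds budget"
    print(f"n={n}: {count} moves, ~{tokens} tokens, {verdict}")
```

Under these assumptions, a model that knows the algorithm perfectly still cannot list every move for 15 disks within the budget, so a failure there says little about reasoning ability.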

In fact, asking the model to be more concise enabled o3 to solve the Tower of Hanoi, as shown in the paper The Illusion of the Illusion of Thinking. That paper also marks the first time an LLM, Claude Opus, is listed as an author on arXiv.

https://x.com/rohanpaul_ai/status/1933296859730301353

LLMs aren't the only ones faking it, either: The Illusion of Human Thinking.

More papers

We introduce MEMOIR — a scalable framework for lifelong model editing that reliably rewrites thousands of facts sequentially using a residual memory module.

https://x.com/qinym710/status/1933514852313563228

What if an LLM could update its own weights? Meet SEAL: a framework where LLMs generate their own training data (self-edits) to update their weights in response to new inputs. Self-editing is learned via RL, using the updated model’s downstream performance as reward.

https://x.com/jyo_pari/status/1933350025284702697

🔥 The finding that “reasoning” features learned by SAEs (sparse autoencoders) can be transferred as-is across models and datasets is super cool, and similar in spirit to Mistral’s finding that there exists a low-dimensional reasoning direction: https://x.com/nrehiew_/status/1933308951334170712
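For readers new to SAEs: a sparse autoencoder re-expresses a model's hidden activations as a wider set of nonnegative "features", trained so that reconstruction is accurate while most features stay at zero. The toy NumPy sketch below shows the standard ReLU encoder, linear decoder, and reconstruction-plus-L1 loss; the sizes and random (untrained) weights are assumptions for illustration, not the setup from the linked work.

```python
import numpy as np

rng = np.random.default_rng(0)
D_MODEL, D_DICT = 16, 64  # toy sizes; real SAE dictionaries are far wider than the model

W_enc = rng.normal(scale=0.1, size=(D_DICT, D_MODEL))
b_enc = np.zeros(D_DICT)
W_dec = rng.normal(scale=0.1, size=(D_MODEL, D_DICT))
b_dec = np.zeros(D_MODEL)


def sae_forward(x):
    """Encode an activation vector into nonnegative features, then reconstruct it."""
    f = np.maximum(0.0, W_enc @ (x - b_dec) + b_enc)  # ReLU keeps features >= 0
    x_hat = W_dec @ f + b_dec
    return f, x_hat


def sae_loss(x, l1_coeff=1e-3):
    """Reconstruction error plus an L1 penalty that pushes most features to zero."""
    f, x_hat = sae_forward(x)
    return np.sum((x - x_hat) ** 2) + l1_coeff * np.sum(np.abs(f))
```

After training, each column of `W_dec` is a direction in activation space; a "reasoning" feature is one whose activation tracks reasoning behavior, which is what makes transferring it across models an interesting result.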

🔥 SakanaAILabs: We’re excited to introduce Text-to-LoRA: a Hypernetwork that generates task-specific LLM adapters (LoRAs) based on a text description of the task. Catch our presentation at #ICML2025! https://x.com/SakanaAILabs/status/1932972420522230214
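To make "a hypernetwork that generates LoRAs" concrete, here is a toy NumPy sketch: a linear hypernetwork maps a task-description embedding to the low-rank factors A and B, and the adapted layer uses W + (alpha / r) · B A in place of the frozen weight W. The sizes, the linear hypernetwork, and `alpha` are assumptions; Sakana's Text-to-LoRA uses its own trained architecture and a real text encoder, neither of which is reproduced here.

```python
import numpy as np

rng = np.random.default_rng(0)
D_EMB, D_IN, D_OUT, RANK = 16, 8, 8, 2  # toy sizes (assumptions)

# Hypothetical linear hypernetwork weights: task embedding -> flattened LoRA factors
H_A = rng.normal(scale=0.1, size=(RANK * D_IN, D_EMB))
H_B = rng.normal(scale=0.1, size=(D_OUT * RANK, D_EMB))


def generate_lora(task_emb):
    """Map a task-description embedding to LoRA factors A (r x d_in) and B (d_out x r)."""
    A = (H_A @ task_emb).reshape(RANK, D_IN)
    B = (H_B @ task_emb).reshape(D_OUT, RANK)
    return A, B


def lora_linear(x, W, A, B, alpha=4.0):
    """Apply a frozen weight W plus the low-rank update (alpha / r) * B @ A to rows of x."""
    return x @ (W + (alpha / RANK) * B @ A).T


# Usage: a random vector stands in for the embedding of a text task description
task_emb = rng.normal(size=D_EMB)
W = rng.normal(size=(D_OUT, D_IN))
x = rng.normal(size=(3, D_IN))
y = lora_linear(x, W, *generate_lora(task_emb))
print(y.shape)
```

The base weights never change; only the small A and B factors depend on the task description, which is what lets one hypernetwork produce many cheap, task-specific adapters.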

🔥 deedydas: DeepSeek just dropped the single best end-to-end paper on large model training. https://x.com/deedydas/status/1924512147947848039

🔥 deedydas: The BEST AI report in the world just dropped and I read all 340 pages so you don’t have to. https://x.com/deedydas/status/1929381310856151280

Terence Tao talks about the beauty of the hardest math problems of the century and how AI will help us solve them.

https://www.youtube.com/watch?v=HUkBz-cdB-k

https://x.com/PJaccetturo/status/1932893260399456513

https://x.com/ROHKI/status/1931081752992477285


Founder, Engineer

AI Socratic

Founder of AI Socratic
