
All the most important AI news and updates from last month (Feb 20 - Mar 15).

The AI Dinner is a recurring event we run with AI NYC. This month it takes place on Mar 19th and focuses on AI engineering, specifically MCP. Here's the link: https://lu.ma/mcp-study-group

It’s clear that AI is made out of hype cycles, and this time it’s MCP taking the stage. The Model Context Protocol (MCP) is a server/client protocol proposed by Anthropic to integrate large language models (LLMs) with external applications.

Adoption has started to pick up, with many big players jumping on board, including Perplexity AI.

The simplest way to understand MCP is to build a simple server yourself. It takes 15 minutes at most: follow this guide or ask Claude to write it for you.
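To give a feel for what such a server does, here is a minimal sketch in plain Python. It is not the official MCP SDK and it skips the transport layer and the initialization handshake. It only shows the JSON-RPC 2.0 request/response shape behind the `tools/list` and `tools/call` methods. The `add` tool, the `TOOLS` registry, and the `handle_request` helper are hypothetical names made up for illustration.

```python
import json

# Hypothetical tool registry (illustration only): name -> metadata + handler.
TOOLS = {
    "add": {
        "description": "Add two integers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: str(args["a"] + args["b"]),
    }
}


def _error(request_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request the way a tiny MCP-style server might."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Advertise the available tools and their input schemas.
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        # Run the tool and wrap its output as a text content block.
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return _error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
}
print(handle_request(json.dumps(call)))
```

A real server would let an SDK handle the transport (stdio or HTTP) and capability negotiation, but the core loop is the same: list tools, then execute the one the model asks for.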

One of the most interesting projects in the MCP world is OpenTools, a registry for searching MCP servers.

Codename Aurora 🛸 🌌

On March 10, 2025, Anthropic introduced Claude Sonnet 3.7, dubbed "Aurora," marking it as their most sophisticated model yet. The model is designed with a focus on seamless human-like interaction, enhanced interpretability, and superior safety features, as Anthropic continues to push boundaries in AI development. Alongside the release, they published a Transparency Report detailing safety protocols, performance metrics, and training insights.

Here are the highlights of Claude Sonnet 3.7:

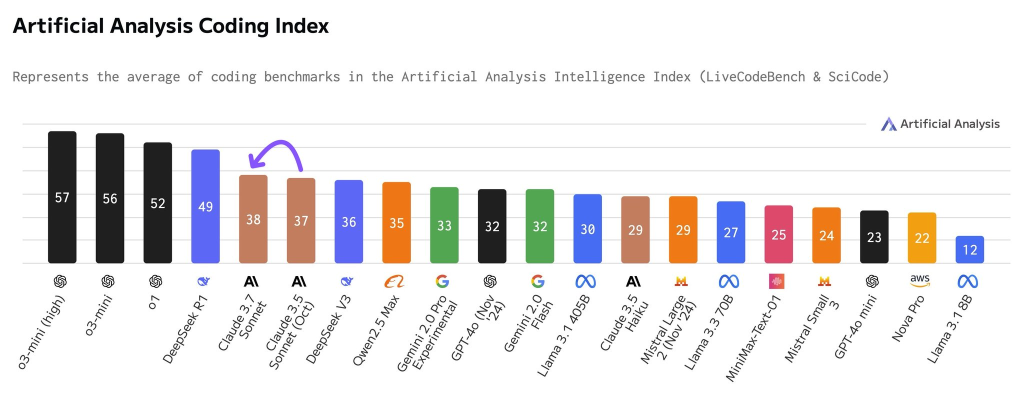

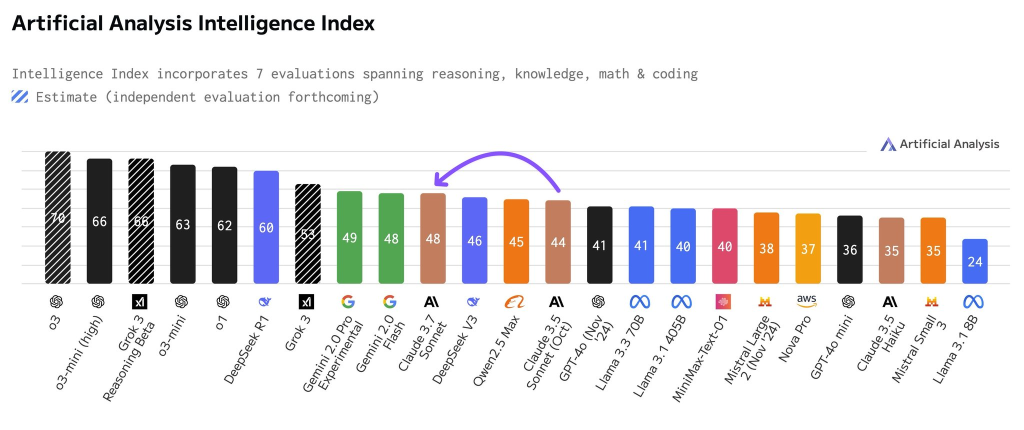

Performance: Excels in conversational fluency with a ChatEval score of 0.85 and cross-lingual understanding with an XGLUE score of 0.88, alongside a notably low error rate of 0.15 in sensitive contexts. On X, many users have been posting impressive coding results, which keeps Sonnet the favorite coding LLM so far.

New Base Model: Acts as the foundation for Anthropic’s next-generation interpretive systems, emphasizing intuitive and safe responses.

Limitations: Performs strongly in dialogue and ethics-driven tasks but may lag in highly abstract or mathematical reasoning compared to specialized competitors, evidenced by third-party comparisons showing OpenAI’s lead in complex math.

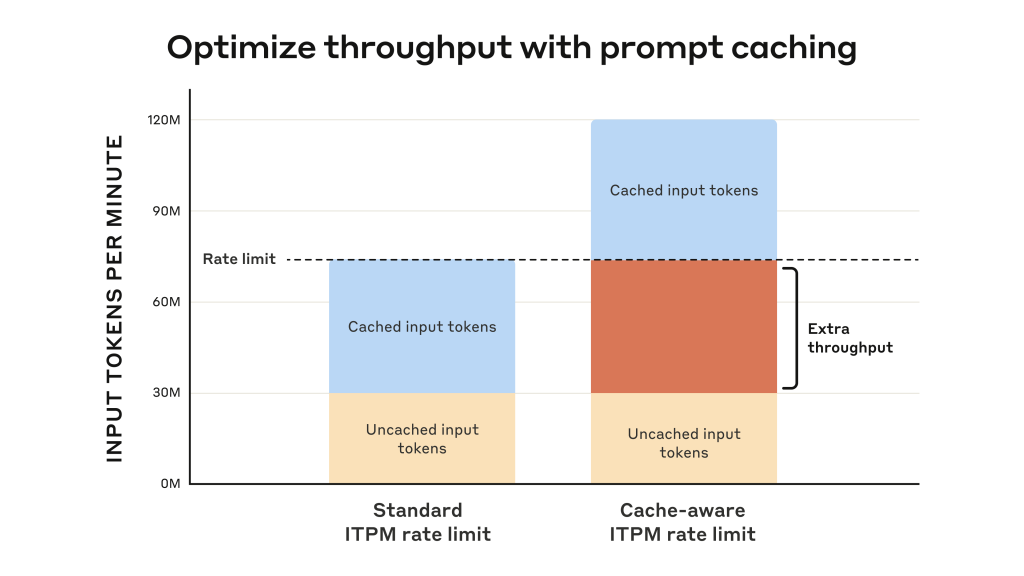

This is not the only update from Anthropic. They also just improved prompt caching in their API, which reduces the number of tokens that need to be reprocessed and therefore the inference cost 🔥!
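As a rough illustration of how prompt caching is requested, the sketch below builds the JSON body for a Messages API call in which a long, reusable system prompt is marked with a `cache_control` block. It only constructs the payload and sends nothing. The model name, the `build_cached_request` helper, and the prompt text are placeholders chosen for this example, not values from the announcement.

```python
import json


def build_cached_request(system_prompt: str, user_message: str) -> dict:
    """Build a Messages API request body that marks the system prompt as cacheable."""
    return {
        "model": "claude-3-7-sonnet-latest",  # placeholder model name (assumption)
        "max_tokens": 512,
        "system": [
            {
                "type": "text",
                "text": system_prompt,
                # Marks the prefix up to and including this block as cacheable.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": [{"role": "user", "content": user_message}],
    }


# Hypothetical long context that many requests would share.
shared_context = "You are a support assistant. Product manual follows...\n" * 50
body = build_cached_request(shared_context, "How do I reset my password?")
print(json.dumps(body, indent=2)[:300])
```

The idea is that repeated calls sharing the same prefix can reuse the cached portion, so only the new user message is processed at the full rate.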

Codename Orion 🚀 🌌

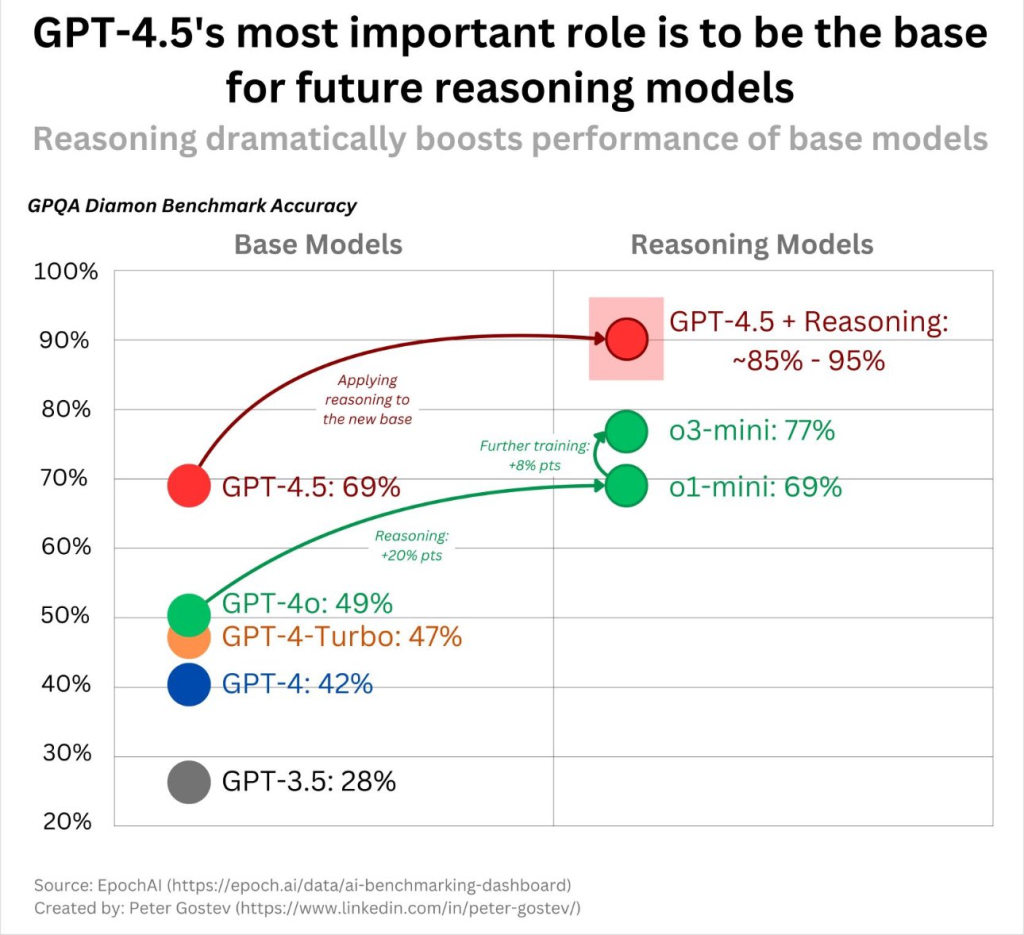

On February 27, 2025, OpenAI launched GPT-4.5, codenamed "Orion," as its most advanced model to date. GPT-4.5 focuses on natural conversation, reduced hallucinations, and improved general-purpose capabilities. OpenAI published a System Card with details on safety, benchmarks, and training strategy.

Here are the highlights of GPT-4.5:

Performance: Excels in factual accuracy (PersonQA: 0.78) and multilingual tasks (MMLU English: 0.896), with a lower hallucination rate (0.19). Source: helicone.ai.

New Base Model: Replaces previous models as the core for OpenAI’s reasoning systems, emphasizing natural dialogue.

Limitations: Strong in practical tasks but lags in complex reasoning compared to specialized models. Source: vellum.ai.

Rollout: The rollout was staggered due to GPU shortages, with plans to expand access to other tiers.

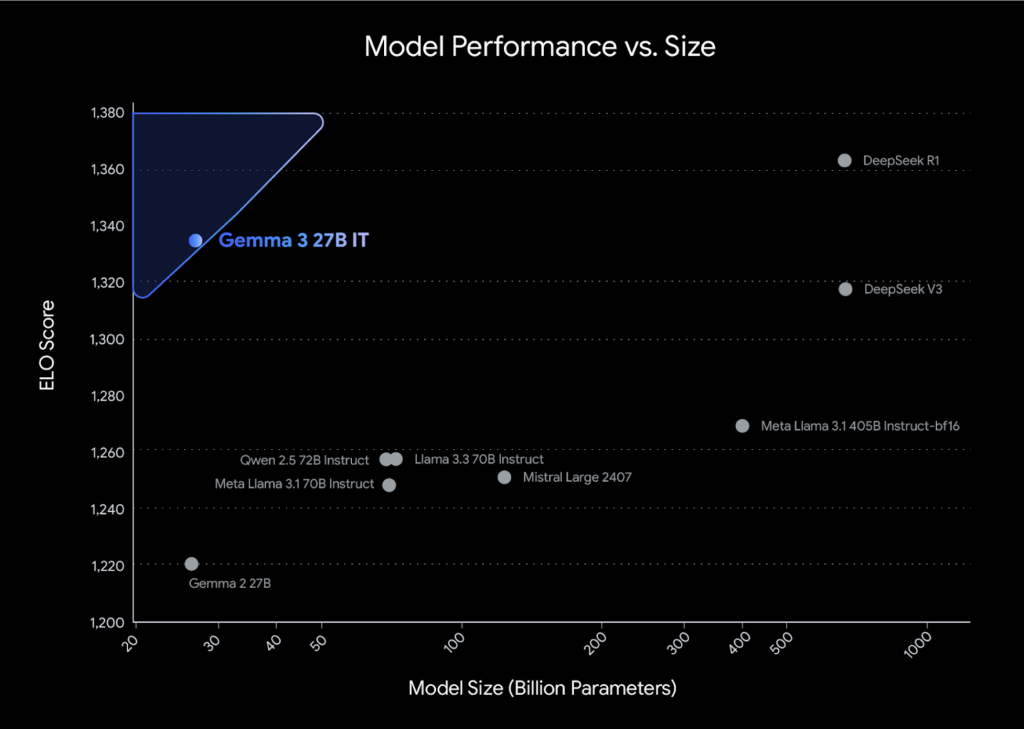

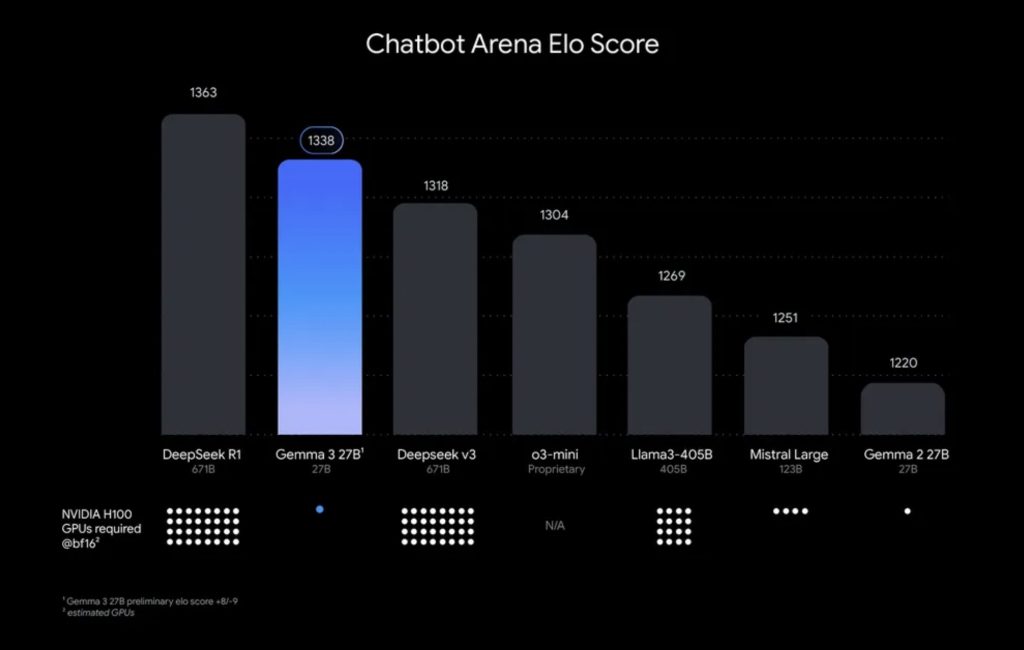

Google has been on a roll, releasing image generation for Gemini 2.0 Flash as well as Gemma 3 27B, the new king of the small-model Elo arena.

Launched March 12, 2025, Gemini 2.0 Flash by Google introduces native image generation within the model. It enables fast, context-aware image creation and editing, with strong text rendering and storytelling consistency. We played with it: it is quite fast and consistent, although in our experience the image quality is relatively low.

Released the same day, Gemma 3 27B is Google’s largest open-source model, built from Gemini research. With 27 billion parameters, it handles text and image inputs, supports a 128K token context, and excels in multilingual tasks and reasoning. Trained on 14T tokens, it runs efficiently on a single GPU and pairs with ShieldGemma 2 for safety. It’s ideal for developers seeking customizable, high-performance AI.

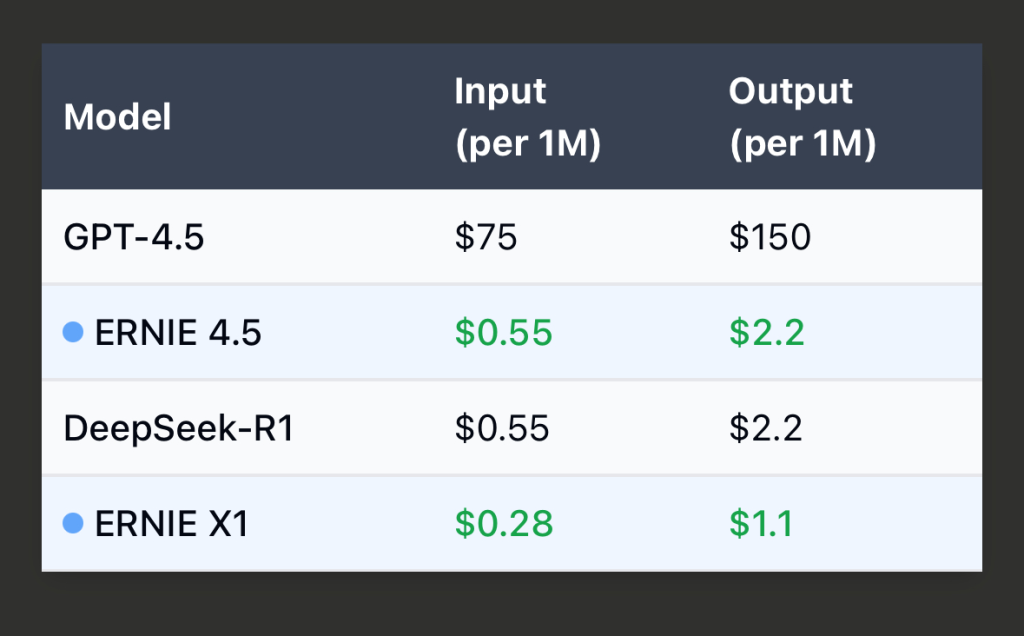

Another small language model, this time from China's 🇨🇳 Baidu. The model performs at DeepSeek R1 level but at half the cost.

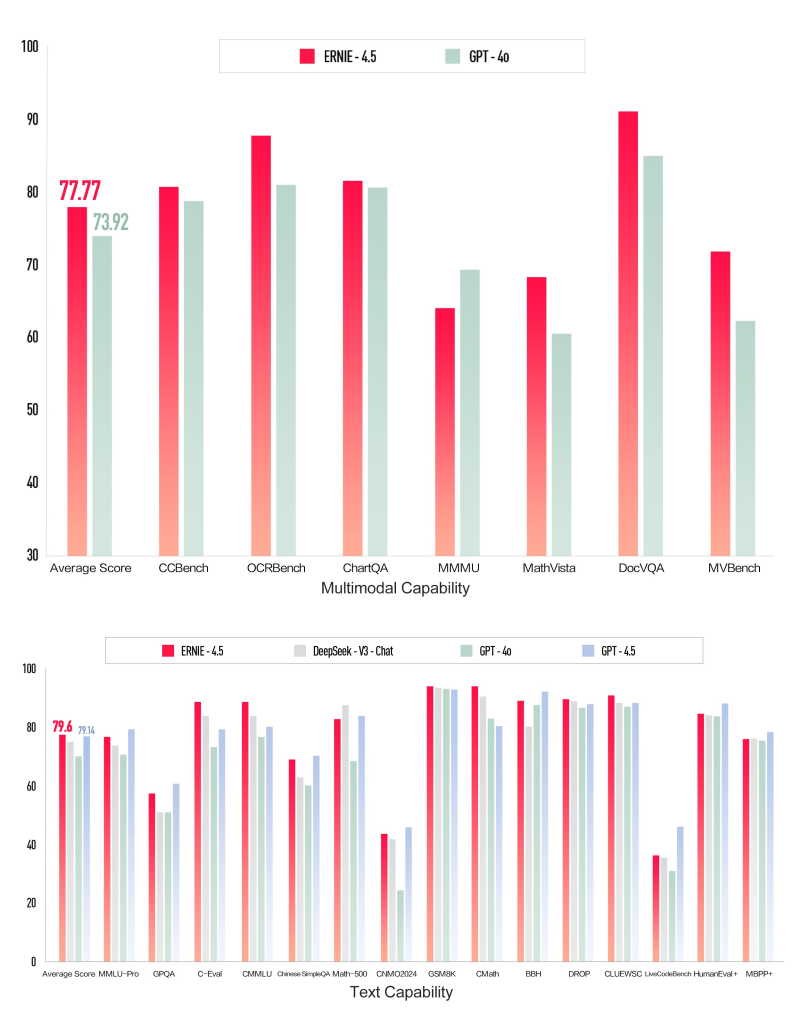

The benchmarks reported by Baidu show that Ernie-4.5 beats 4o in multimodal capability and, on some benchmarks, even GPT-4.5 in text capability. Models today are increasingly overfitted to win benchmarks, though, and testing this model by hand generally tells a different story. You can try it yourself at https://yiyan.baidu.com/ (just be aware that your data is being collected).

Meet Manus, the AI agent from China’s 🇨🇳 Butterfly Effect. Dubbed a 'DeepSeek moment,' it’s a fully autonomous tool that tackles real-world tasks, like building websites, analyzing stocks, and planning trips.

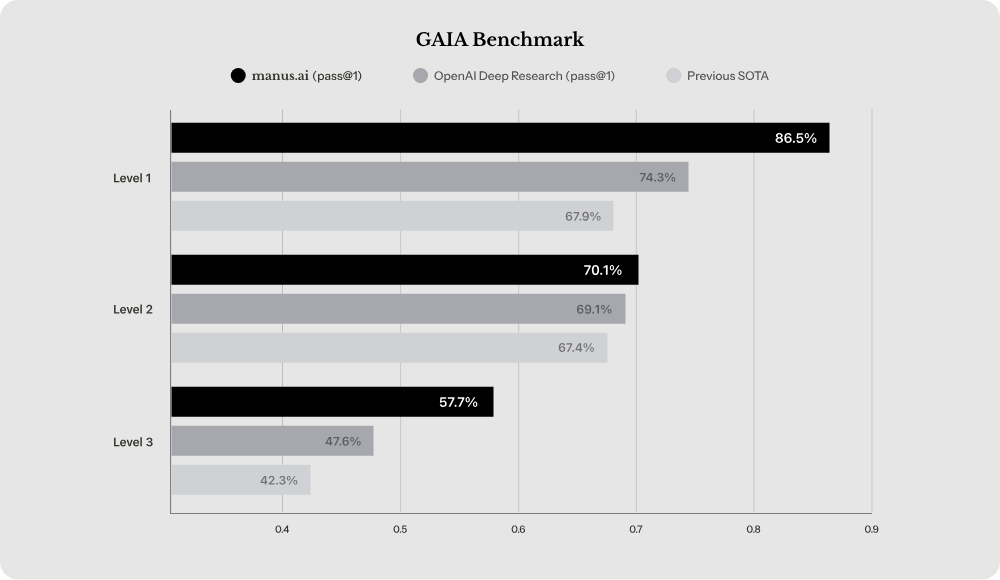

Powered by Claude 3.5 and Qwen, it got some attention on Twitter. On the GAIA benchmark it shows some edge over OpenAI’s Deep Research.

We believe what's more important about this launch is that Chinese research labs are clearly here to innovate and lead, not just follow the US labs.

We tried to copy flowai.xyz but had no success. Other websites and demos look good though, and people are already freaking out and searching for ways to keep AI from copying their work.

How do you defend against AI scrapers? Just add a hidden system prompt to your HTML: https://x.com/Erwin_AI/status/1900052620758467059.

https://x.com/aidenybai/status/1899840110449111416

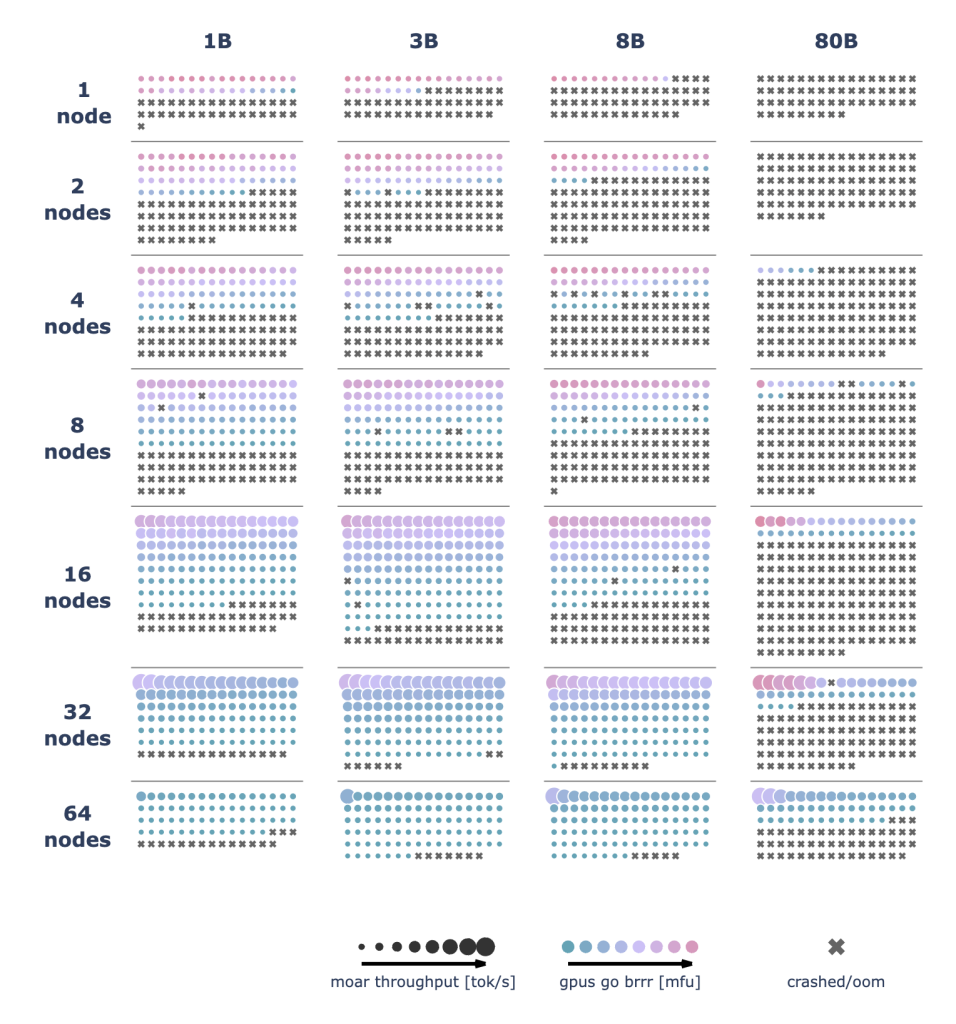

The "Ultra-Scale Playbook," hosted on Hugging Face by Nanotron (a library for pretraining transformer models), is a comprehensive, open-source guide focused on training LLMs on large-scale GPU clusters.

It serves as an educational resource for understanding and implementing advanced techniques to optimize LLM training at scale. This doc covers key concepts such as:

5D parallelism: A framework combining data, tensor, sequence/context, pipeline, and expert parallelism.

The ZeRO optimization technique: Designed to reduce memory redundancy and maximize GPU utilization.

Fast CUDA kernels for efficient computation, and strategies for overlapping compute and communication to address scaling bottlenecks.
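To make the ZeRO idea concrete, here is a back-of-the-envelope sketch of per-GPU memory for model states under each ZeRO stage. It assumes mixed-precision training with Adam, which is the usual accounting of 2 bytes per parameter for half-precision weights, 2 for gradients, and 12 for the fp32 master weights plus the two optimizer moments. Activations, buffers, and fragmentation are ignored, and the function name `zero_memory_gb` is made up for this example.

```python
def zero_memory_gb(num_params: float, num_gpus: int, stage: int) -> float:
    """Estimate per-GPU memory (in GB) for model states under a given ZeRO stage.

    stage 0: plain data parallelism, everything replicated on every GPU
    stage 1: optimizer states sharded across GPUs
    stage 2: optimizer states and gradients sharded
    stage 3: optimizer states, gradients, and parameters sharded
    """
    params_bytes, grads_bytes, optim_bytes = 2.0, 2.0, 12.0  # bytes per parameter
    if stage >= 1:
        optim_bytes /= num_gpus
    if stage >= 2:
        grads_bytes /= num_gpus
    if stage >= 3:
        params_bytes /= num_gpus
    return num_params * (params_bytes + grads_bytes + optim_bytes) / 1e9


# Example: a 7.5B-parameter model trained on 64 GPUs.
for stage in range(4):
    print(f"ZeRO stage {stage}: {zero_memory_gb(7.5e9, 64, stage):.1f} GB per GPU")
```

Under these assumptions the model states shrink from 120 GB per GPU with no sharding to about 1.9 GB with full sharding, which is why ZeRO makes otherwise impossible model sizes fit. The price is extra communication, which is where overlapping compute and communication comes in.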

It integrates theoretical explanations with practical insights, supported by interactive plots, over 4,000 scaling experiments, and audio summaries.

Aimed at both beginners and experts, it provides tools, code examples, and detailed discussions on GPU memory optimization and distributed training, making it a valuable resource for those looking to train massive AI models efficiently.

Calling it fantastic is an understatement, trust me!

https://huggingface.co/spaces/nanotron/ultrascale-playbook

This month we found many interesting videos across multiple topics, including philosophy, which is having a renaissance in the field of AI.

This is a MUST watch for everyone who's learning LLMs. Andrej Karpathy explains LLMs from pre-training all the way to inference.

https://www.youtube.com/watch?v=7xTGNNLPyMI

ML Street Talk is one of my new favorite AI podcasts, with incredible topic quality and guests.

Federico Barbero discusses why transformers struggle with tasks like counting and copying long text due to architectural bottlenecks and limitations in maintaining information fidelity. He draws comparisons to over-squashing in graph neural networks and highlights the role of the softmax function in these challenges, while also proposing practical modifications to improve transformer performance.
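One way to build intuition for the softmax point is the toy sketch below. It may not match the episode's exact argument, and the setup is our own assumption: a single "important" token gets a fixed logit advantage (`gap`) over every other token, and we watch how much attention weight it keeps as the sequence grows.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def max_attention_weight(seq_len: int, gap: float = 1.0) -> float:
    """Weight given to one token whose logit exceeds all others by `gap`."""
    logits = [gap] + [0.0] * (seq_len - 1)
    return max(softmax(logits))


for n in (10, 100, 1000, 10000):
    print(n, round(max_attention_weight(n), 4))
```

With a bounded logit gap, the weight on the important token keeps shrinking as more tokens are added, so the attention gets diluted over long inputs. That is one plausible reason why exact copying and counting become harder with length.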

https://www.youtube.com/watch?v=FAspMnu4Rt0

We just discovered this incredible podcast series by Curt Jaimungal, focused on theoretical physics, consciousness, AI, and God. In this episode, Matthew Segall discusses the limits of current views of reality, compares them to the outdated Ptolemaic model, and suggests that embracing mortality through introspection can deepen our understanding of existence.

https://youtu.be/DeTm4fSXpbM?si=ln2F4JBONSADK2kQ


Founder, Engineer

AI Socratic

