
The most important AI news and updates from last month: Oct 15 – Nov 15.

Sign up for the mailing list!

Let's start by announcing a new chapter of AI NYC: AI Builders Milan. Roberto Stagi is taking on the leadership and will organize the AI aperitivi. We're super excited about this!

AI Aperitivo 1.0

Milano

Tuesday, November 18

AI Builders Milan is hosting the first AI Aperitivo 🍸🍷🫒🧀, bringing together Milan's top AI engineers, researchers, and founders for an evening of Socratic dialogues.

Event: AI Aperitivo 1.0

AI Dinner 15.0

New York

Wednesday, November 12

AI NYC is hosting another AI Dinner 🍲🍕🍺. We'll discuss news and updates, using this blog post to guide the Socratic dialogues.

Event: AI Dinner 15.0

AI use is widespread, but most organizations are still at an early stage, experimenting with AI and AI agents. High performers redesign their workflows. Only 39% report any impact on EBIT.

Link: mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

Extropic just released a new type of hardware called Thermodynamic Sampling Units (TSUs). Their approach, called thermodynamic computing, flips traditional computing on its head. Instead of fighting against the random "noise" (thermal fluctuations) in electronics to force clean 0s and 1s, they embrace that noise as the core of the computation.

There's a lot of controversy around it, from the hardware design, which looks like a 3D-printed mesh surrounded by unnecessary symbols, to the obfuscated technical details.

OK, let's see how it works, according to Extropic's own write-up.

The Hardware Foundation: Probabilistic Bits (p-bits) in CMOS Chips

The Computation Process: Annealing via Energy Minimization

The Software Layer: Denoising Thermodynamic Models (DTMs)
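To make the first two ideas concrete, here is a minimal software sketch. It assumes the textbook p-bit model from the probabilistic-computing literature, not Extropic's chip or the THRML API: each p-bit outputs +1 with probability sigmoid(2·β·I), where I is the weighted input from its neighbors, and slowly raising β (lowering the temperature) anneals the network toward low-energy states of an Ising energy function. The helper names `energy`, `pbit_update`, and `anneal` are hypothetical.

```python
import math
import random

# Illustrative software sketch of p-bits; NOT Extropic's hardware or the THRML API.


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def energy(spins, J, h):
    """Ising energy E = -1/2 * sum_ij J[i][j]*s_i*s_j - sum_i h[i]*s_i (J symmetric, zero diagonal)."""
    n = len(spins)
    pair = sum(J[i][j] * spins[i] * spins[j] for i in range(n) for j in range(n))
    field = sum(h[i] * spins[i] for i in range(n))
    return -0.5 * pair - field


def pbit_update(i, spins, J, h, beta, rng):
    """Resample p-bit i: +1 with probability sigmoid(2*beta*I_i), where I_i is its local input."""
    local_input = sum(J[i][j] * spins[j] for j in range(len(spins))) + h[i]
    spins[i] = 1 if rng.random() < sigmoid(2.0 * beta * local_input) else -1


def anneal(J, h, betas, sweeps_per_beta=20, seed=0):
    """Gibbs-sample all p-bits while raising beta (cooling), so low-energy states dominate."""
    rng = random.Random(seed)
    spins = [rng.choice([-1, 1]) for _ in h]
    for beta in betas:
        for _ in range(sweeps_per_beta):
            for i in range(len(spins)):
                pbit_update(i, spins, J, h, beta, rng)
    return spins


if __name__ == "__main__":
    # Hypothetical example: 4 p-bits in a ferromagnetic chain, each with a positive field.
    J = [[1.0 if abs(i - j) == 1 else 0.0 for j in range(4)] for i in range(4)]
    h = [1.0] * 4
    betas = [0.1 * k for k in range(1, 51)]  # schedule: beta from 0.1 up to 5.0
    result = anneal(J, h, betas)
    print(result, energy(result, J, h))
```

In this toy chain the lowest-energy state is all +1 (energy -7.0), so the noisy updates, which explore freely at low β, should settle there as β grows. The noise is the search mechanism rather than something to suppress.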

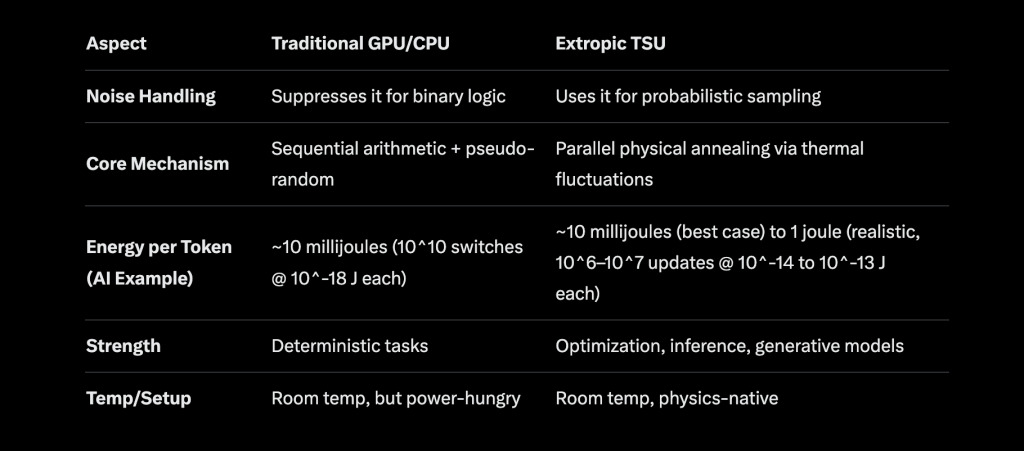

Comparison between regular GPU/CPU and TSU

Extropic also released a probabilistic programming language and THRML, a Python library that simulates how the hardware runs. See "How to run THRML" by David Shapiro.

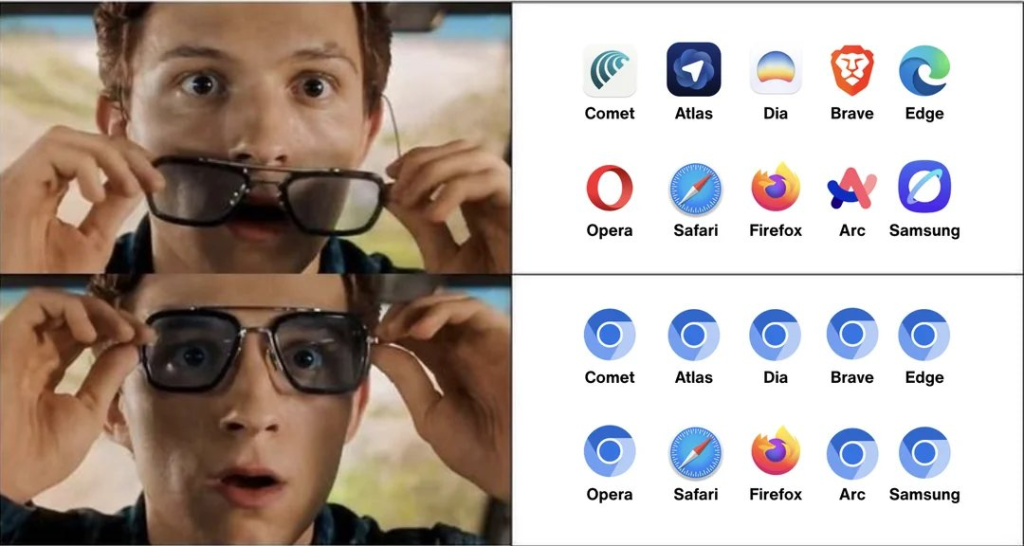

In the past few months we've seen a lot of new AI browsers appear. They're all Chromium-based, with extra AI features on top. Google has yet to upgrade Chrome with AI capabilities. Let's explore the new browsers:

The Browser Company of New York (yes, that's the name) started the AI browser trend with Arc, which then evolved into Dia. Dia's main feature is the AI sidebar that lets you talk with one or multiple pages at the same time. Dia was recently acquired by Atlassian, sadly for me, because it was my browser of choice and Atlassian's reputation for high-quality software is not the best; if you've ever used JIRA, you know what I mean.

Perplexity AI is expanding from a search engine into other sectors with its Comet browser, trying to capture a piece of the pie. If you ask me, I believe they're trying to get acquired by one of the MANGO companies. The main feature of Comet is the AI assistant that lets you automate email, calendar, and shopping tasks.

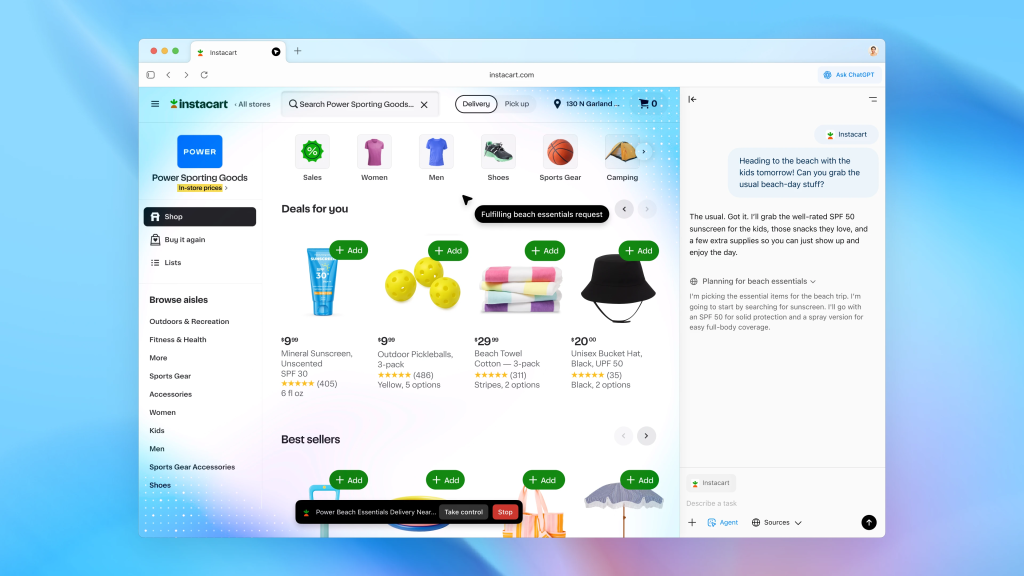

OpenAI launched ChatGPT Atlas in October. It integrates ChatGPT deeply into every page and lets you run an AI agent while watching what it does in the browser. It looks impressive at first. I asked it to duplicate our last AI Dinner event on Luma: it opened Luma, signed up, went to the settings page, and then somehow got stuck in a loop. There's a "Stop" button that lets you take control, so at least for now you can stop it, continue the task manually, and then ask it to resume the automation.

Whatever browser you use today, switching won't give you a 10x improvement; at least, it won't give you anything more than installing the ChatGPT extension. But it's clear that the "explore and click" internet as we know it is going to turn into an intent-based internet.

"The best ChatGPT that $100 can buy."

⭐️ Andrej Karpathy, our AI legend, just dropped nanochat, a complete, end-to-end implementation of an LLM-based chat assistant like ChatGPT — but compact, clean, and easy to hack. The entire stack, from tokenization to web UI, is implemented in one minimal codebase with almost no external dependencies.

It’s designed to run on a single 8×H100 node, orchestrated by simple scripts like speedrun.sh, which execute the entire lifecycle — tokenization, pretraining, finetuning, evaluation, inference, and even web serving through a lightweight chat interface.

In short, nanochat lets you train, run, and chat with your own LLM, all for about $100 of GPU time (roughly four hours on an 8×H100 node).

⭐️ Read this introduction to learn every step nanochat executes in the speedrun.sh script: github.com/karpathy/nanochat/discussions/1.

Repo: github.com/karpathy/nanochat

Nanochat test: nanochat.karpathy.ai

DeepSeek AI has unveiled DeepSeek-OCR, an approach to compressing long contexts via optical 2D mapping: text is rendered as an image and encoded into far fewer vision tokens. The results suggest that vision-based compression can handle text-heavy documents efficiently, which could change how large language models (LLMs) process long textual inputs.

The DeepSeek-OCR system consists of two primary components: DeepEncoder as the encoder and DeepSeek3B-MoE-A570M as the decoder. Together, they achieve 97% OCR precision when the compression ratio is below 10× (a 10× ratio means 10 text tokens are represented by 1 vision token). Even at an aggressive 20× ratio, the system maintains approximately 60% accuracy.
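The ratios above are simple arithmetic, and a quick sketch helps translate them into token budgets. The helper names below are hypothetical and this is not DeepSeek-OCR code; it only assumes the ratio definition given in the text (text tokens per vision token).

```python
import math

# Back-of-the-envelope helpers for optical compression ratios.
# Hypothetical names; arithmetic only, not DeepSeek-OCR code.


def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token (10.0 means 10 text tokens -> 1 vision token)."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens


def vision_token_budget(text_tokens: int, ratio: float) -> int:
    """Vision tokens needed to hold `text_tokens` at a given compression ratio (rounded up)."""
    return math.ceil(text_tokens / ratio)


if __name__ == "__main__":
    page = 1000  # a hypothetical page of 1,000 text tokens
    print(vision_token_budget(page, 10))  # 100: at 10x, the edge of the reported ~97% regime
    print(vision_token_budget(page, 20))  # 50: at 20x, where ~60% accuracy is reported
    print(compression_ratio(page, 100))   # 10.0
```

In other words, halving the vision-token budget from 100 to 50 for the same page is what moves the reported precision from roughly 97% down to roughly 60%.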

Karpathy asks whether all LLM inputs should actually be images. The advantages he lists:


· Claim: Decoder‑only transformer LMs are almost‑surely injective: different prompts map to unique last‑token hidden states; this holds at initialization and is preserved under gradient descent.

· Method: Prove components are real‑analytic, show collisions occur only on a measure‑zero parameter set, and that GD updates don’t move parameters into that set in finite steps.

· Evidence: Billions of collision tests on six SOTA LMs found no collisions.

· Algorithm (SipIt): Reconstructs exact input text from hidden activations by exploiting causality; sequentially matches each token’s hidden state given the known prefix; offers linear‑time guarantees.

· Failure cases: the result applies to decoder‑only transformers with analytic activations and continuous initialization; quantization, weight tying, duplicated embeddings, or non‑analytic components can break injectivity. So yes, there are still ways to preserve "privacy".

Paper: arxiv.org/abs/2510.15511
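Here is a toy sketch of the sequential inversion idea behind SipIt. It assumes a hypothetical tiny causal model (fixed random embeddings plus a recurrent tanh update) standing in for a real decoder-only transformer. Because the hidden state at position t depends only on tokens 0..t, once the prefix has been recovered, each position can be recovered by checking which vocabulary token reproduces the observed state. This version uses exhaustive search over the vocabulary, O(T·|V|) for T positions; the paper's actual candidate search may be smarter.

```python
import math
import random

DIM = 8
VOCAB = ["the", "cat", "sat", "on", "mat", "a", "dog", "ran"]

# Hypothetical toy "model": fixed random token embeddings and a causal update,
# so the hidden state at position t depends only on tokens 0..t.
_rng = random.Random(42)
EMBED = {tok: [_rng.gauss(0.0, 1.0) for _ in range(DIM)] for tok in VOCAB}


def step(prev_state, token):
    """Hidden state for `token` given the state summarizing the prefix before it."""
    return [math.tanh(e + 0.5 * p) for e, p in zip(EMBED[token], prev_state)]


def hidden_states(tokens):
    """Forward pass: one hidden state per position (causal)."""
    state = [0.0] * DIM
    states = []
    for tok in tokens:
        state = step(state, tok)
        states.append(state)
    return states


def sq_dist(a, b):
    """Squared Euclidean distance between two state vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def invert(states):
    """Recover tokens position by position, reusing the already-recovered prefix."""
    prefix_state = [0.0] * DIM
    recovered = []
    for observed in states:
        # Try every vocabulary entry; keep the one whose state matches the observation.
        candidates = {tok: step(prefix_state, tok) for tok in VOCAB}
        best = min(candidates, key=lambda tok: sq_dist(candidates[tok], observed))
        recovered.append(best)
        prefix_state = candidates[best]
    return recovered


if __name__ == "__main__":
    prompt = ["the", "cat", "sat", "on", "a", "mat"]
    print(invert(hidden_states(prompt)))
```

The exact match always has distance 0, so as long as no two tokens produce the same state (the injectivity claim), the prompt comes back unchanged.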

Instead of simulating clicks and scrolls, researchers let LLMs reason about which playlist, feed, or product lineup you'd actually prefer.

And it worked. Across Amazon, Spotify, MovieLens, and MIND datasets, they found:

link: x.com/alxnderhughes/status/1988202281314251008

This paper received a top score at NeurIPS 2025. It asks: does RL actually make LLMs better reasoners?

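The authors study Reinforcement Learning with Verifiable Rewards (RLVR) and find that while it improves pass@k for small k (the chance that at least one of k sampled answers is correct), it doesn't create new reasoning patterns: at large k, the base models catch up with and even surpass their RL-trained versions, meaning the base model still determines the upper limit of reasoning ability.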
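To see why small k and large k can tell different stories, here is the standard unbiased pass@k estimator introduced with OpenAI's Codex evaluation (Chen et al., 2021). The helper name `pass_at_k` and the numbers in the example are hypothetical; this is a sketch of the metric, not the paper's evaluation code.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: chance that at least one of k answers, drawn without
    replacement from n samples of which c are correct, is correct."""
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    # Hypothetical numbers: a model solves a problem in 5 of 100 samples.
    print(pass_at_k(100, 5, 1))   # ~0.05: rarely right on the first try
    print(pass_at_k(100, 5, 50))  # ~0.97: almost always right somewhere in 50 tries
```

A model that is rarely right on one try can still score near 1 at large k, so pass@k at large k measures which problems a model can solve at all, while small k mostly measures how reliably it picks the right answer.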

Interestingly, it’s distillation, not RL, that shows genuine signs of emergent reasoning 😮.

link: x.com/jiqizhixin/status/1987710546674856051

web: limit-of-rlvr.github.io

Tencent + Tsinghua just dropped a paper called Continuous Autoregressive Language Models (CALM) and it basically kills the “next-token” paradigm every LLM is built on.

Instead of predicting one token at a time, CALM predicts continuous vectors that represent multiple tokens at once.

Meaning: the model doesn't think “word by word”… it thinks in multi-token chunks, one chunk per step.

→ 4× fewer prediction steps (each vector = ~4 tokens)

→ 44% less training compute

→ No discrete vocabulary: pure continuous reasoning

→ New metric (BrierLM) replaces perplexity entirely

link: x.com/rryssf_/status/1985646517689208919
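Because CALM predicts continuous vectors instead of a softmax over a vocabulary, it has no explicit per-token probabilities to plug into perplexity. The Brier score, however, can be estimated from samples alone. The sketch below shows that core trick, assuming BrierLM builds on the standard Brier score; the paper's exact definition (n-gram variants, scaling) may differ, and the function names are hypothetical.

```python
import random


def brier_exact(probs, target):
    """Brier score of a categorical distribution: sum_k (p_k - [k == target])^2 (lower is better)."""
    return sum((p - (1.0 if k == target else 0.0)) ** 2 for k, p in enumerate(probs))


def brier_from_samples(sample, target, n_pairs=100_000, seed=0):
    """Likelihood-free estimate that needs only a sampler, no access to probabilities.

    With x1, x2 drawn independently from the model,
    E[1(x1 == x2) - 1(x1 == y) - 1(x2 == y) + 1] equals the exact Brier score,
    because P(x1 == x2) = sum_k p_k^2 and P(x == y) = p_y.
    """
    rng = random.Random(seed)
    total = 0
    for _ in range(n_pairs):
        x1, x2 = sample(rng), sample(rng)
        total += (x1 == x2) - (x1 == target) - (x2 == target) + 1
    return total / n_pairs


if __name__ == "__main__":
    probs = [0.7, 0.2, 0.1]  # hypothetical next-token distribution over 3 tokens

    def sampler(rng):
        return rng.choices(range(3), weights=probs)[0]

    print(brier_exact(probs, 0))            # ~0.14
    print(brier_from_samples(sampler, 0))   # close to 0.14, computed from samples only
```

The point is the design choice: the estimator only calls `sample`, so it works for any generator you can draw from, which is exactly the situation of a model without an explicit likelihood.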

Perplexity in NLP measures how well a language model predicts text; lower means better.
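For reference, perplexity is the exponential of the average negative log-likelihood the model assigns to the actual tokens. A minimal sketch, with a hypothetical helper name and made-up probabilities:

```python
import math


def perplexity(token_probs):
    """exp(mean negative log-likelihood) of the probabilities given to the actual tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


if __name__ == "__main__":
    print(perplexity([0.25, 0.25, 0.25, 0.25]))  # ~4.0: as unsure as a uniform pick among 4 tokens
    print(perplexity([0.9, 0.8, 0.95]))          # closer to 1: the model predicts the text well
```

A perplexity of 4 reads as "on average the model was as uncertain as choosing among 4 equally likely tokens", which is why lower is better.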

https://www.youtube.com/watch?v=iO03t21xhdk&feature=youtu.be&themeRefresh=1

https://www.youtube.com/watch?v=29gkDpR2orc&t=890s

MLST — AI benchmarks are broken! [Prof Melanie Mitchell]

I really love this part of the MLST interview in which Prof Mitchell says the key LLM question is: what kind of “understanding,” if any, is really going on?

https://youtu.be/fS-NN6VRzT8?si=0SJl24g9cIW1IVwm

In search of Nothing | David Deutsch, Lee Smolin, Amanda Gefter

https://www.youtube.com/watch?v=rMSEqJ_4EBk

MLST — Google Researcher Shows Life "Emerges from Code"

Blaise Agüera y Arcas explores some mind-bending ideas about what intelligence and life really are—and why they might be more similar than we think


Founder, Engineer

AI Socratic

Founder of AI Socratic
